I was a kid in the 80s and I’ve got a real soft spot for physical video games in cabinets. Aside from the game, there are big chonky buttons and glowing marquee art. Ten-year-old me was already very well acquainted with the concept of “coin return slots”. Finding a quarter in a coin return could make your whole week in 1980. The first game I ever came across was probably a Pac-Man in the Quinte Mall (probably pretty close to the Sneaky Pete’s) [1].

MAME Bezel Art

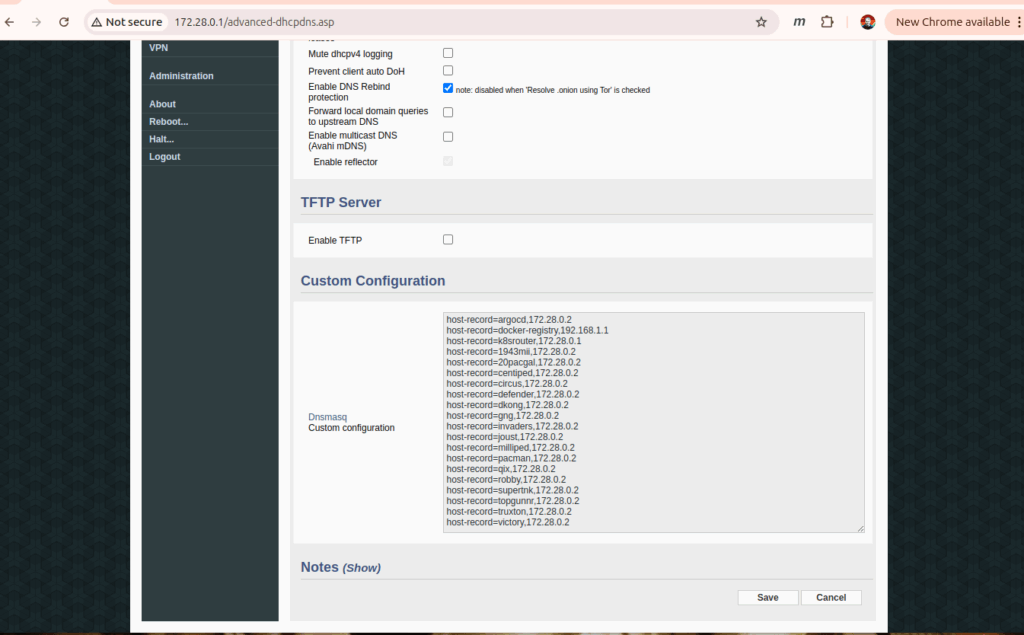

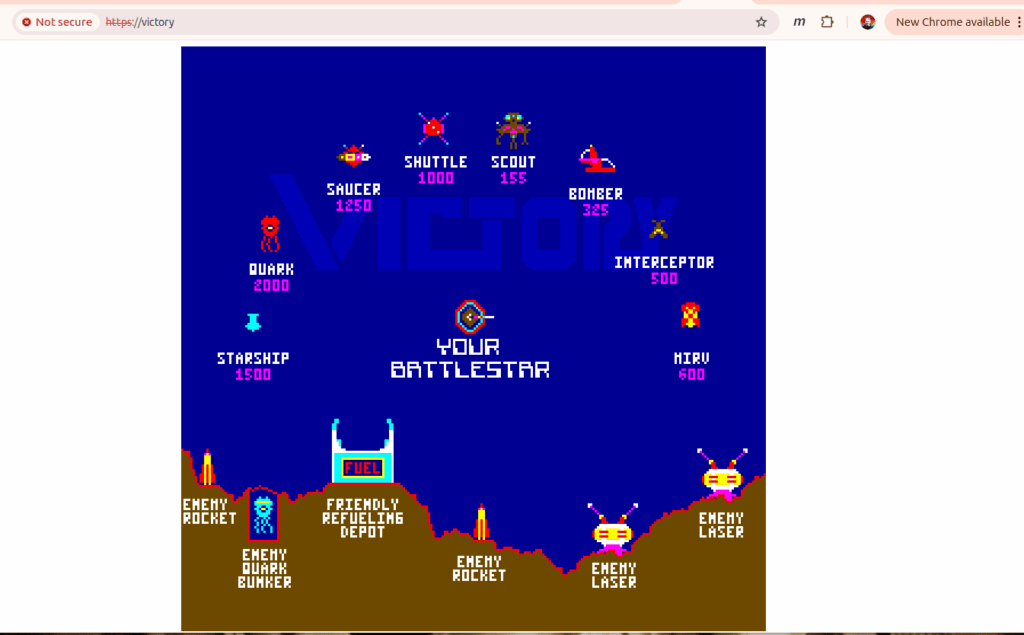

I didn’t have RetroPie running very long before I found the bezel pack. Retro video games were displayed on 4:3 aspect ratio CRT monitors (picture Defender or Joust). Some games turned the monitor sideways, so basically 3:4 (think Centipede or Pac-Man). Other games (like the Donkey Kong Jr. cocktail game) would flip the screen so that two players could sit on either side of the game.

CRT monitors also had a prominent curved front, so no matter how tightly you fit the monitor in the cabinet there was going to be a gap. That’s where the bezel comes in: it covers the gap and makes the CRT look flatter. Nothing on the front of a video game goes undecorated, so there was usually some bezel art (often with instructions and pricing). Then a flat piece of glass went on top.

Modern displays have a wider aspect ratio than CRTs (usually 16:9 or 16:10), so there’s some screen real estate on the edges of the display for artwork. Bezel art is a 16:9 PNG image (the ones I use are 1920×1080) with a transparent hole in the middle. The hole, centered in the screen, is either 810×1080 (vertical games) or 1440×1080 (horizontal games), depending on the expected orientation of the game. So there’s 555px or 240px on either side of the game for artwork.
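The arithmetic above can be sketched as a small helper. This is only an illustration: `bezelHole` is a made-up name, and it assumes the hole spans the full height of the art and is centered horizontally, as in the 1920×1080 art described here.

```javascript
// Hypothetical helper (not part of the bezel pack or Emularity).
// Assumes the transparent hole is as tall as the art and centered:
// 4:3 for horizontal games, 3:4 for vertical ones.
function bezelHole(artWidth, artHeight, vertical) {
  // Multiply before dividing so whole-pixel sizes stay exact.
  const holeWidth = vertical
    ? Math.trunc((artHeight * 3) / 4)
    : Math.trunc((artHeight * 4) / 3);
  return {
    width: holeWidth,
    height: artHeight,
    // Pixels left over for artwork on each side of the hole.
    sideMargin: Math.trunc((artWidth - holeWidth) / 2),
  };
}

console.log(bezelHole(1920, 1080, false)); // horizontal game, e.g. Defender
console.log(bezelHole(1920, 1080, true));  // vertical game, e.g. Pac-Man
```

For 1920×1080 art this gives a 1440-wide hole with 240px margins for horizontal games, and an 810-wide hole with 555px margins for vertical ones.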

Marquee Art

Most games also had a marquee: a translucent piece of backlit plexiglass on the top of the machine. The dimensions of the marquee art are less critical. I usually expect an aspect ratio of 32:9. On my RetroPie setup I display the marquee on the bottom half of a second monitor mounted above the main game monitor.
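One reason 32:9 is convenient is that the bottom half of a 16:9 monitor is exactly 32:9. The sketch below shows that, and how an image with a different ratio could be fitted into the same region without distortion. The name `marqueeRect` is hypothetical, and the 1920×1080 monitor and 3200×900 image sizes are assumptions picked for the example.

```javascript
// Hypothetical helper; screen and image sizes below are assumptions.
// Returns where a marquee image would sit in the bottom half of a screen,
// scaled to fit while keeping its aspect ratio, centered horizontally.
function marqueeRect(screenW, screenH, imgW, imgH) {
  const regionH = Math.trunc(screenH / 2); // bottom half of the screen
  const scale = Math.min(screenW / imgW, regionH / imgH);
  const width = Math.round(imgW * scale);
  const height = Math.round(imgH * scale);
  return {
    left: Math.trunc((screenW - width) / 2),
    top: screenH - height, // flush with the bottom edge
    width,
    height,
  };
}

// A 32:9 image fills the bottom half of a 16:9 screen edge to edge.
console.log(marqueeRect(1920, 1080, 3200, 900));
```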

Arkade Layout

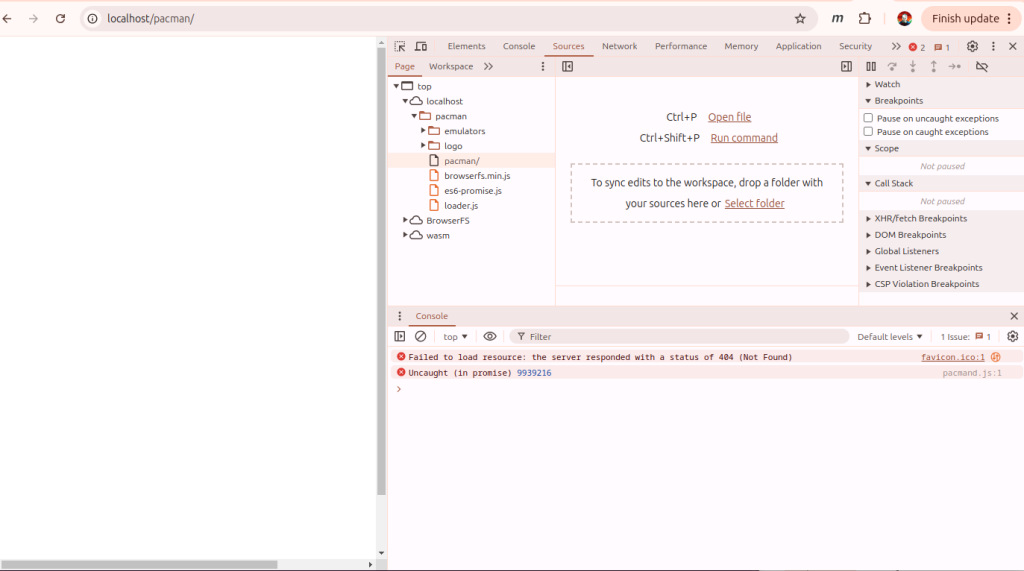

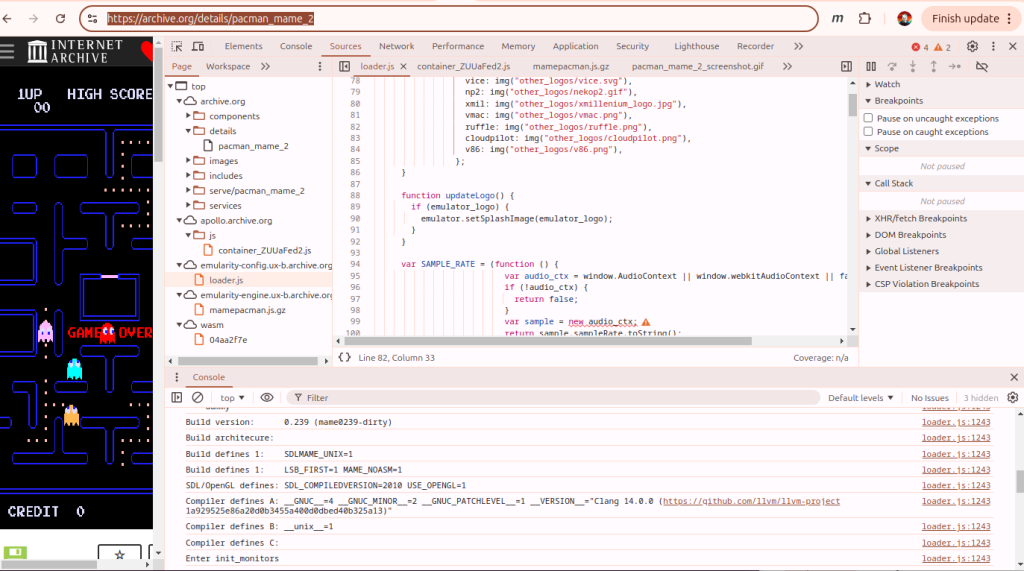

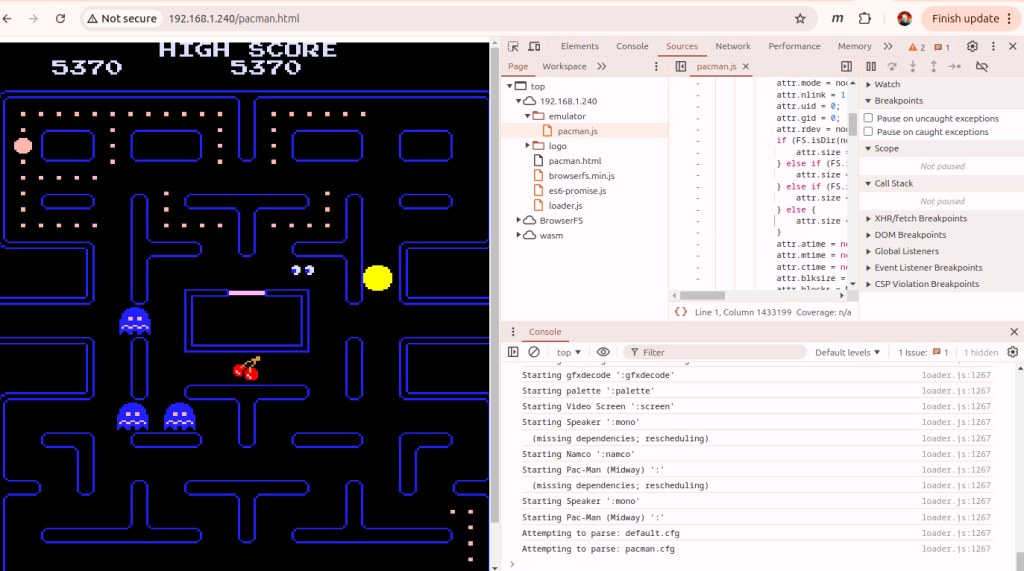

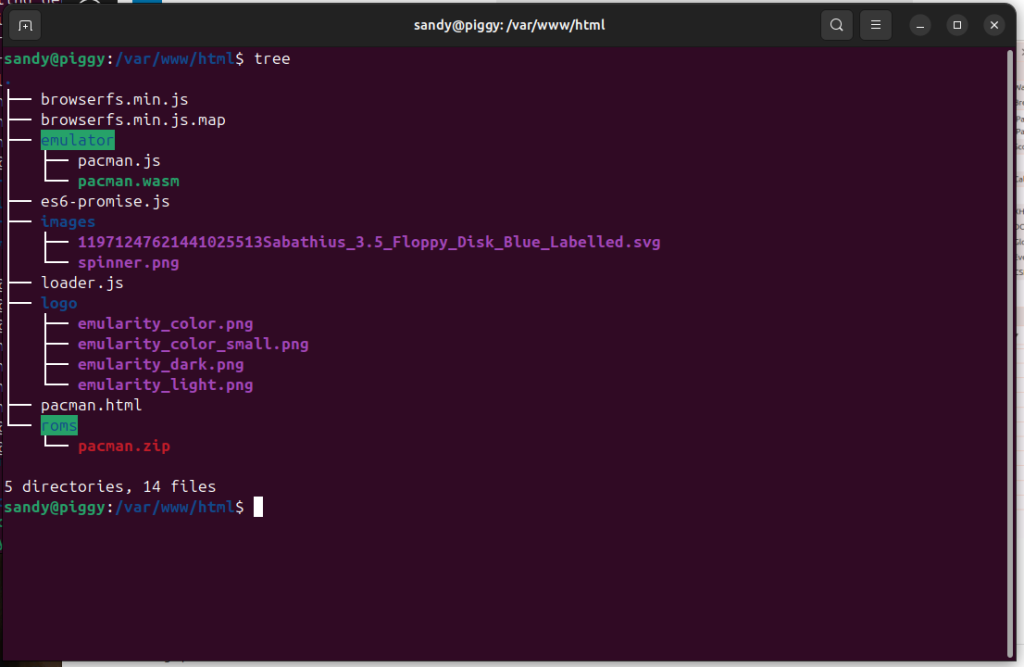

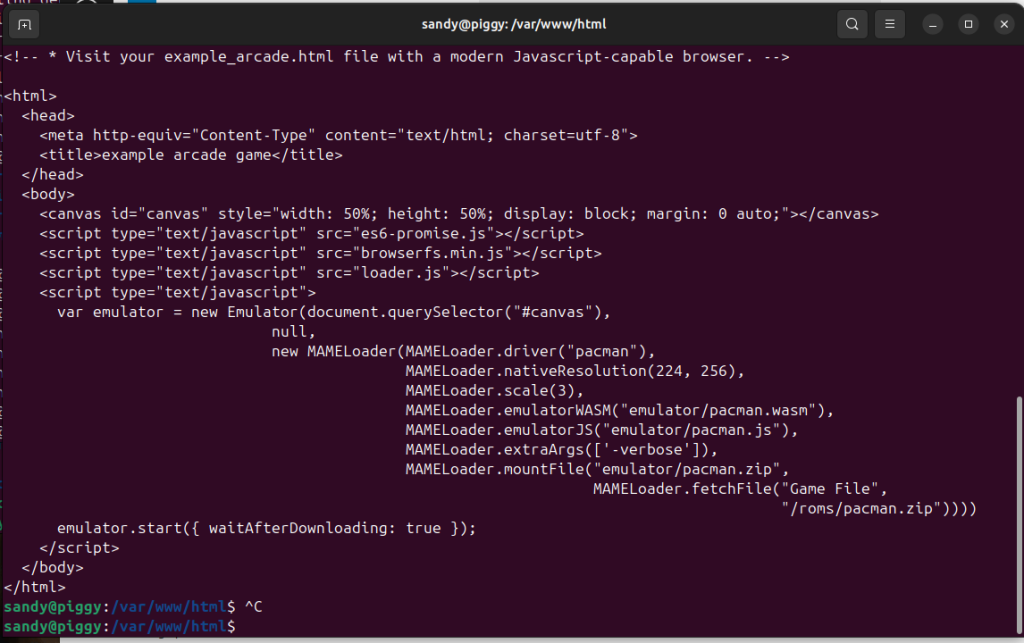

The Emulator object is passed a canvas reference where the game is drawn. When I first created index.html pages for the games, I just used the example code so they look pretty plain. I’d like to add the marquee and bezel art around the MAME canvas sort of like this:

A big goal for this change was to come up with a way to do the layout without touching the loader.js code. Ultimately that decision probably made my job easier. I had to learn some style sheet tricks, but I didn’t have to get into someone’s JavaScript code and figure out how to paint on canvases.

HTML For The Layout

This is the HTML to represent the layout:

```html
<div id="wrapper">
  <img id="marquee" src="marquee.png" alt="Marquee">
  <img id="bezel" src="bezel.png" alt="Bezel">
  <div id="emularity">
    <canvas id="canvas" style="margin: 0; padding: 0"></canvas>
  </div>
</div>
```

CSS Tricks

The normal layout for those entities would have the game canvas below the bezel, when they really need to overlap. I did that with CSS:

```html
<style>
html, body {
  padding: 0; margin: 0;
  width: 100vw; height: 100vh;
}

#wrapper {
  position: absolute;
  width: fit-content;
  background-color: black;
  padding: 0; margin: 0;
  width: 100vw; height: 100vh;
}

#marquee {
  width: 100%;
  aspect-ratio: 32 / 9;
  opacity: 0.8;
  padding: 0; margin: 0;
}

#bezel {
  position: relative;
  pointer-events: none;
  z-index: 2;
  width: 100%;
  aspect-ratio: 16 / 9;
  opacity: 0.8;
  padding: 0; margin: 0;
}

#emularity {
  position: relative;
  z-index: 1;
  padding: 0; margin: 0;
}
</style>
```

There’s quite a lot going on there. The bezel art and the emularity div both use position: relative. That lets me position them dynamically (see below) and also gives me a z-index (bezel on top with the canvas below). User input needs to pass through the bezel to reach the canvas (hence pointer-events: none). In all this, I’m trying to maximize the display, so I zero all the padding and margins. The wrapper paints the background black, and I set a little opacity on the images. That helped me troubleshoot the overlap, but now I just like the way it looks.

Game Scale JS

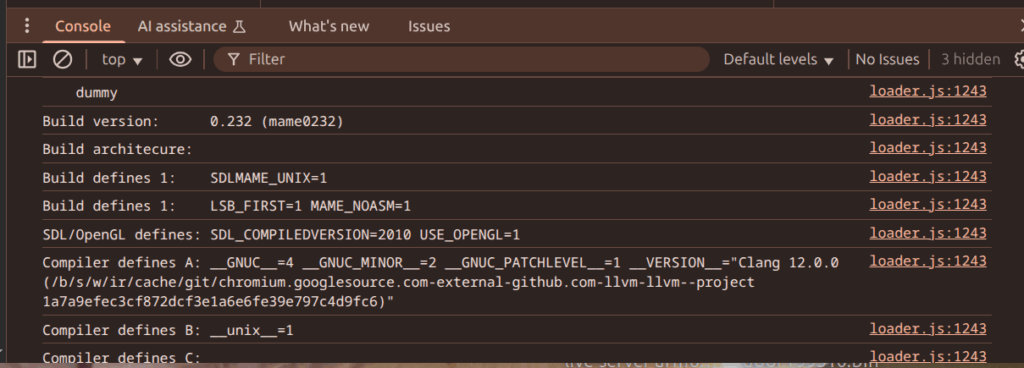

Then there’s a big update to the example script section to scale everything as the emulator is starting up:

```html
<script type="text/javascript">
function game_scale(loader, canvas) {
  var wrapper = document.querySelector("#wrapper");
  var marquee = document.querySelector("#marquee");
  var bezel = document.querySelector("#bezel");
  var emularity = document.querySelector("#emularity");
  var rotation = 0;
  var rotated = ( rotation == 90 || rotation == 270 );

  // The bezel art is 16:9 aspect ratio and has either a 4:3 (horizontal game) or 3:4 (vertical game)
  // transparent hole in the middle. The height of the game should be the same as the height of the hole
  // (which is just the height of the art).
  // The width of the hole depends on the aspect ratio
  //  - e.g. for centiped in a 1920x1080 bezel art the height is just 1080 and the width is 3/4*1080
  // The actual resolution for centiped doesn't match the aspect ratio of the gap so the game will be a
  // little bit stretched to fit the hole...
  var game_height = bezel.height;
  var game_width = Math.trunc(game_height * 4 / 3);
  if ( rotated ) {
    game_width = Math.trunc(game_height * 3 / 4);
  }

  // Tell the loader to draw the game in a canvas that is the computed width x height
  // and disable any scaling since those width x height values are computed to fit
  // perfectly.
  loader.nativeResolution.width = game_width;
  loader.nativeResolution.height = game_height;
  loader.scale = 1.0;

  // The game canvas is inside a div called "emularity".
  // Position the div so that it appears in the hole in the bezel art.
  // The bezel and emularity are 'position: relative' so that they can overlap *and*
  // the emularity div is declared second.
  // Set the emularity top value to "-bezel.height" so that it moves from below the bezel
  // to overlapping.
  // The left edge of the emularity div is the middle of the bezel minus half the game_width.
  //
  // The wrapper div provides the black background; stretch that out to fit the marquee and bezel.
  // CSS lengths need a unit, so append "px" to every computed value.
  emularity.style.height = game_height + "px";
  emularity.style.width = game_width + "px";
  emularity.style.top = (-bezel.height) + "px";
  emularity.style.left = Math.trunc((bezel.width - game_width) / 2) + "px";
  wrapper.style.height = (marquee.height + bezel.height) + "px";
  wrapper.style.width = marquee.width + "px";

  emulator.start({ waitAfterDownloading: false });
}

var nr = { width: 352, height: 240 };
var canvas = document.querySelector("#canvas");
var loader = new MAMELoader(MAMELoader.driver("robby"),
                            MAMELoader.nativeResolution(nr.width, nr.height),
                            MAMELoader.scale(1.0),
                            MAMELoader.emulatorWASM("mameastrocde.wasm"),
                            MAMELoader.emulatorJS("mameastrocde.js"),
                            MAMELoader.extraArgs(['-verbose']),
                            MAMELoader.mountFile("robby.zip",
                                                 MAMELoader.fetchFile("Game File",
                                                                      "/roms/robby.zip")));
var emulator = new Emulator(canvas, null, loader);

// The event is named 'load' (not 'onload'), and the listener must be a function;
// calling game_scale(...) inline would run it immediately instead of on load.
window.addEventListener('load', function() { game_scale(loader, canvas); });
window.addEventListener('resize', function() { location.reload(true); });
</script>
```

There’s a bunch of “magic” hard-coded values in the script above that are actually provided by the MAME metadata database. During the build, each game gets a JSON summary of its metadata. The one for robby looks like this:

```
sandy@www:~/arkade$ more build/robby/robby.json
{
  "name": "robby",
  "description": "The Adventures of Robby Roto!",
  "sourcefile": "midway/astrocde.cpp",
  "sourcestub": "astrocde",
  "year": "1981",
  "manufacturer": "Dave Nutting Associates / Bally Midway",
  "players": "2",
  "type": "joy",
  "buttons": "1",
  "ways": "4",
  "coins": "3",
  "channels": "1",
  "rotate": "0",
  "height": "240",
  "width": "352"
}
```

MAME can tell you things like the rotation angle of the game and its native resolution (which I basically throw out above). Ultimately the best way to code this up was to decide if the game is 4:3 or 3:4, figure out what the bezel art has been scaled to, and then make the canvas the size of the hole in the bezel (and jam the scale to 1). The resize listener was a compromise: because I stayed out of the loader code, I can’t really resize the canvas on the fly, and the emulator really needs to be restarted to get a new size. I force a reload on resize to recalculate the new canvas size (this restarts the emulator, but who’s really resizing the window mid-game?).
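To make that concrete, here is a minimal sketch of how the metadata could drive the layout instead of a hard-coded `rotation`. The function name `layoutFromMeta` and the returned field names are made up for illustration; only the `rotate`, `width`, and `height` fields come from the JSON summary, and they are strings, so they need parsing. The stretch factors assume the metadata lists the unrotated resolution, so the two values are swapped for rotated games.

```javascript
// Hypothetical helper: derives the canvas size and position from a game's
// JSON summary plus the rendered size of the bezel image.
function layoutFromMeta(meta, bezelWidth, bezelHeight) {
  // The JSON stores numbers as strings ("rotate": "0"), so parse them.
  const rotation = parseInt(meta.rotate, 10);
  const rotated = rotation === 90 || rotation === 270;

  // The hole is as tall as the bezel; 4:3 normally, 3:4 when rotated.
  const gameHeight = bezelHeight;
  const gameWidth = rotated
    ? Math.trunc((gameHeight * 3) / 4)
    : Math.trunc((gameHeight * 4) / 3);

  // Assumption: width/height describe the unrotated display, so the
  // on-screen native size swaps them for rotated games.
  const nativeW = parseInt(rotated ? meta.height : meta.width, 10);
  const nativeH = parseInt(rotated ? meta.width : meta.height, 10);

  return {
    rotated,
    width: gameWidth,
    height: gameHeight,
    // Pull the canvas up over the bezel and center it horizontally.
    top: -bezelHeight,
    left: Math.trunc((bezelWidth - gameWidth) / 2),
    // How far the native picture is stretched on each axis.
    scaleX: gameWidth / nativeW,
    scaleY: gameHeight / nativeH,
  };
}

const robby = { rotate: "0", width: "352", height: "240" };
console.log(layoutFromMeta(robby, 1920, 1080));
```

When `scaleX` and `scaleY` differ, as they do for robby in a 4:3 hole, the picture is slightly stretched. That is the trade-off of sizing the canvas to the bezel rather than to the native resolution.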

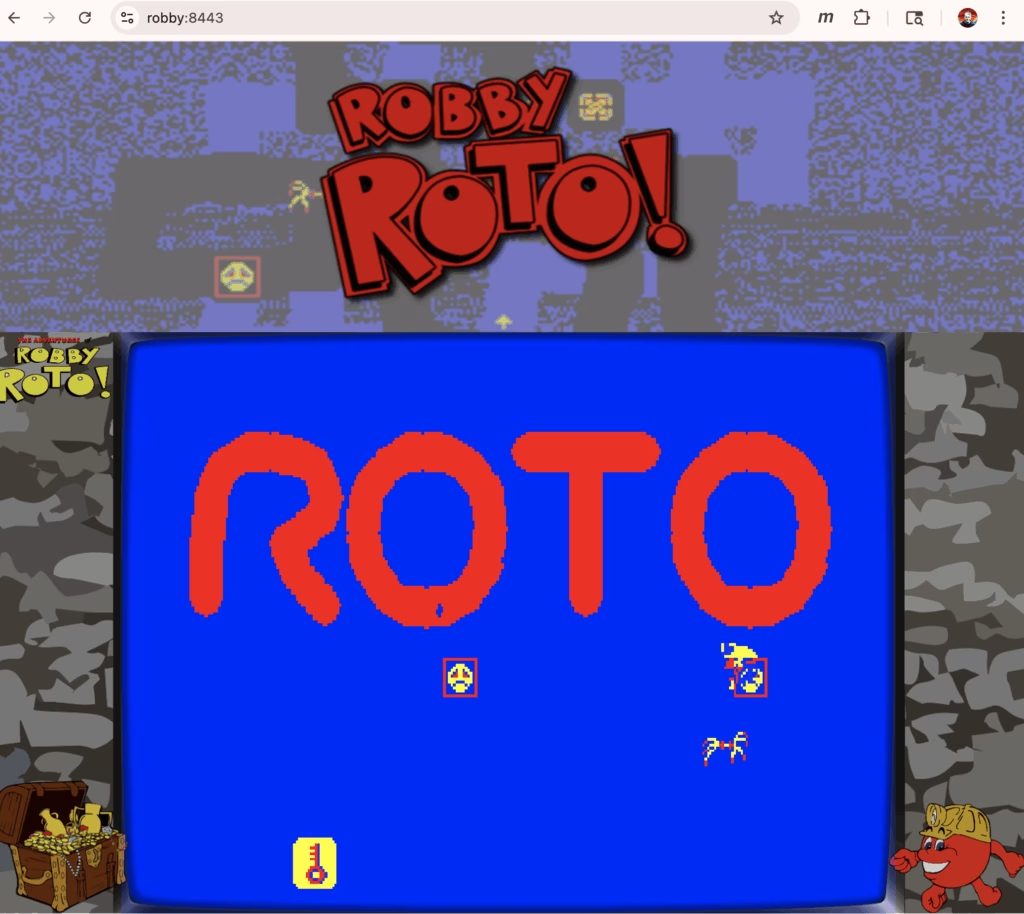

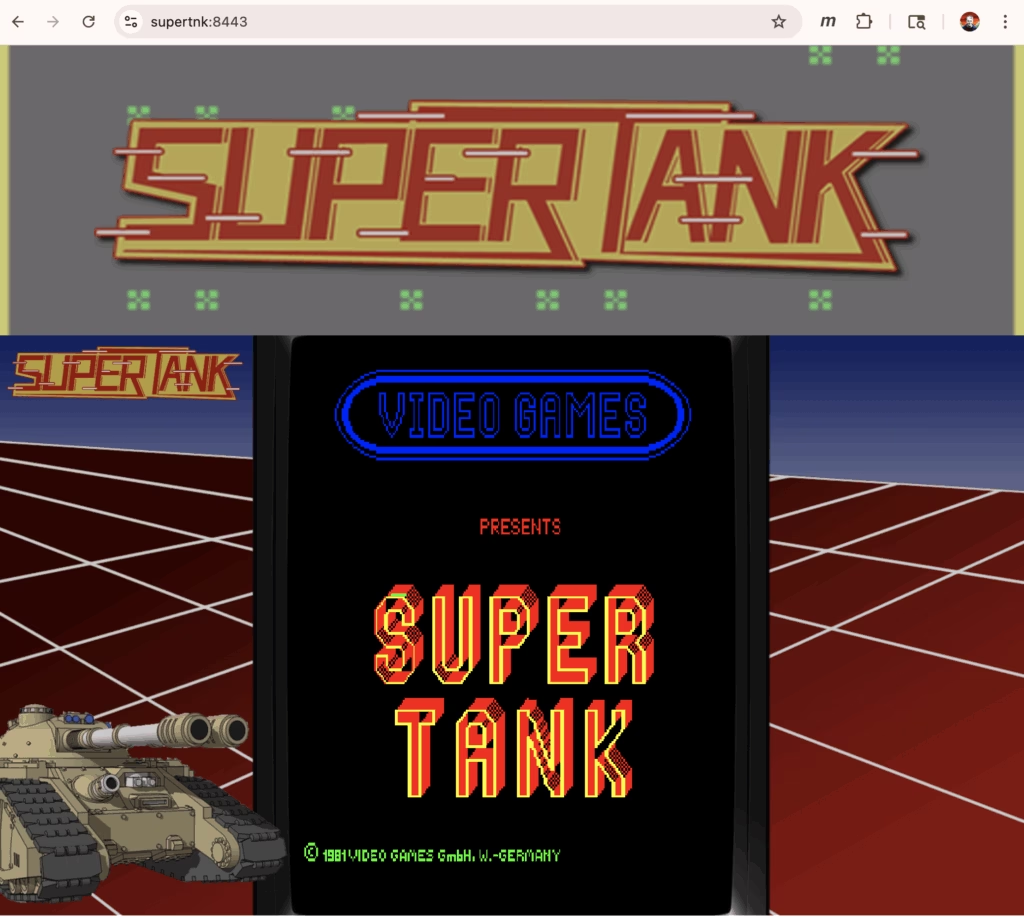

The end result looks like these:

-Sandy

[1] There was a Battlezone over by the grocery store, and a Missile Command somewhere in there too. Anyway, enough “old man reminiscing to clouds”…