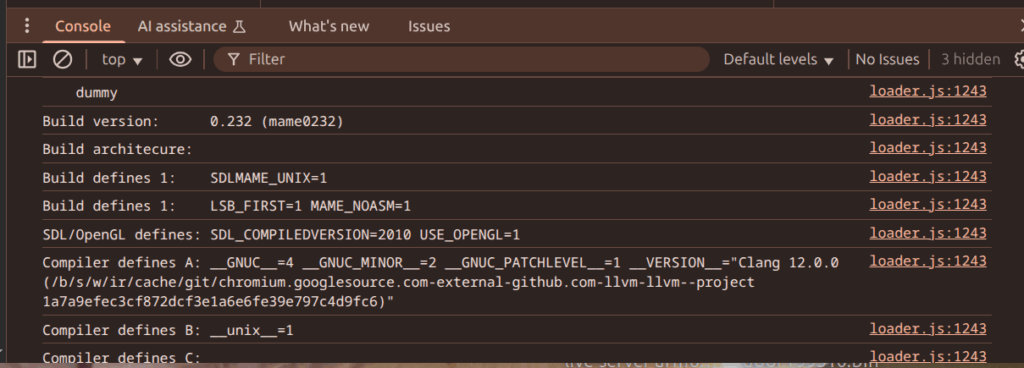

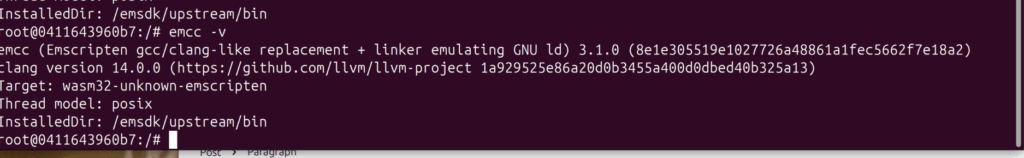

In the last entry I got my proof-of-concept arcade emulator going. I’m calling the project “arkade”. The first thing I did was set up a new GitHub repo for the project. Last time I’d found that archive.org seems to use MAME version 0.239 and emsdk 3.0.0. After some more trial and error I found that they actually use emsdk v3.1.0 (based on matching the LLVM hash in the version string that gets dumped).

The MAME Builder Image

So I locked that into a Dockerfile:

    FROM ubuntu:24.04

    ENV EMSDK_VER=3.1.0
    ENV MAME_VER=mame0239

    RUN apt update \
        && DEBIAN_FRONTEND=noninteractive \
        apt -y install git build-essential python3 libsdl2-dev libsdl2-ttf-dev \
        libfontconfig-dev libpulse-dev qtbase5-dev qtbase5-dev-tools qtchooser qt5-qmake \
        && apt clean \
        && rm -rf /var/lib/apt/lists/*

    # Build the latest copy of mame for the -listxml function
    RUN git clone https://github.com/mamedev/mame --depth 1 \
        && make -C /mame -j $(nproc) OPTIMIZE=3 NOWERROR=1 TOOLS=0 REGENIE=1 \
        && install /mame/mame /usr/local/bin \
        && rm -rf /mame

    # Set up to build the WebAssembly versions
    RUN git clone https://github.com/mamedev/mame --depth 1 --branch $MAME_VER \
        && git clone https://github.com/emscripten-core/emsdk.git \
        && cd emsdk \
        && ./emsdk install $EMSDK_VER \
        && ./emsdk activate $EMSDK_VER

    ADD Makefile.docker /Makefile
    WORKDIR /
    RUN mkdir -p /output

The docker image also has a full build of the latest version of MAME (I’ll use that later). The final clone step sets up the MAME and Emscripten versions that seemed to work.

The Game Images

With the tools in place, I wanted to move on to building little nginx docker images, one for each arcade machine. To get that going, each image needs a few bits and pieces:

- nginx webserver and basic configuration

- the emularity launcher and support javascript

- the emulator javascript (emulator.js and emulator.wasm)

- the arcade rom to playback in the emulator

That collection of stuff looks like this as a Dockerfile:

    FROM arm64v8/nginx
    ADD nginx/default /etc/nginx/conf.d/default.conf
    RUN mkdir -p /var/www/html /var/www/html/roms
    ADD build/{name}/* /var/www/html

A couple of things to see here. I’m using the arm64v8 version of nginx because I want to run the images on my Pi cluster. The system builds up a set of files in build/{name}, where name is the name of the game.

So I set up a Makefile that creates the build/<game> directory populated with all the bits and pieces. There’s a collection of metadata needed to render a game:

- The name of the game

- The emulator for the game

- The width x height of the game

MAME can actually output all kinds of metadata about the games it emulates. To get access to that, I build the full version of the emulator binary so that I can run mame -listxml <gamelist>. There’s a target in the Makefile that runs mame on the small list of games and outputs a file called list.xml. From that, a couple of python scripts parse the XML down to the metadata that’s needed.
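A minimal sketch of what that parsing step might look like, using only the standard library. The sample XML is hand-written in the general shape of -listxml output (real output carries far more per machine), and the function name and dictionary keys are my own assumptions, not the names used in the arkade scripts.

```python
import xml.etree.ElementTree as ET
from pathlib import PurePosixPath

# Hand-written sample in the shape of `mame -listxml` output.
# Values mirror the joust example in this post; they are illustrative only.
SAMPLE_XML = """
<mame>
  <machine name="joust" sourcefile="williams.cpp">
    <description>Joust (Green label)</description>
    <display tag="screen" type="raster" rotate="0" width="292" height="240"/>
  </machine>
</mame>
"""


def parse_machines(xml_text):
    """Return one metadata dict per <machine> element that has a display."""
    root = ET.fromstring(xml_text)
    games = []
    for machine in root.iter("machine"):
        display = machine.find("display")
        if display is None:
            continue  # skip entries without a screen
        games.append({
            "name": machine.get("name"),
            "description": machine.findtext("description", default=""),
            # "williams.cpp" or "midway/williams.cpp" both become "williams"
            "emulator": PurePosixPath(machine.get("sourcefile", "")).stem,
            "width": int(display.get("width", 0)),
            "height": int(display.get("height", 0)),
            "rotate": int(display.get("rotate", 0)),
        })
    return games


if __name__ == "__main__":
    for game in parse_machines(SAMPLE_XML):
        print(game)
```

Taking the stem of the sourcefile path is a hedge against MAME versions that report the driver file with or without a directory prefix.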

Ultimately the build directory for a game looks something like this:

    sandy@www:~/arkade/build$ tree joust
    joust
    ├── browserfs.min.js
    ├── browserfs.min.js.map
    ├── es6-promise.js
    ├── images
    │   ├── 11971247621441025513Sabathius_3.5_Floppy_Disk_Blue_Labelled.svg
    │   └── spinner.png
    ├── index.html
    ├── joust.json
    ├── loader.js
    ├── logo
    │   ├── emularity_color.png
    │   ├── emularity_color_small.png
    │   ├── emularity_dark.png
    │   └── emularity_light.png
    ├── mamewilliams.js
    └── mamewilliams.wasm

    3 directories, 14 files

And the index.html file looks like this:

    sandy@www:~/arkade/build/joust$ cat index.html
    <html>
      <head>
        <meta http-equiv="Content-Type" content="text/html; charset=utf-8">
        <title>Joust (Green label)</title>
      </head>
      <body>
        <canvas id="canvas" style="width: 50%; height: 50%; display: block; margin: 0 auto;"></canvas>
        <script type="text/javascript" src="es6-promise.js"></script>
        <script type="text/javascript" src="browserfs.min.js"></script>
        <script type="text/javascript" src="loader.js"></script>
        <script type="text/javascript">
          var emulator =
            new Emulator(document.querySelector("#canvas"),
                         null,
                         new MAMELoader(MAMELoader.driver("joust"),
                                        MAMELoader.nativeResolution(292, 240),
                                        MAMELoader.scale(3),
                                        MAMELoader.emulatorWASM("mamewilliams.wasm"),
                                        MAMELoader.emulatorJS("mamewilliams.js"),
                                        MAMELoader.extraArgs(['-verbose']),
                                        MAMELoader.mountFile("joust.zip",
                                                             MAMELoader.fetchFile("Game File",
                                                                                  "/roms/joust.zip"))))
          emulator.start({ waitAfterDownloading: true });
        </script>
      </body>
    </html>

The metadata shows up here in a few ways. The <title> field in index.html is based on the game description. The nativeResolution is a function of the game display “rotation” and width/height; pulling that from the metadata helps get the game viewport size and aspect ratio correct. The name of the game is used to set the driver and rom name. There’s a separate driver field in the metadata, which is actually the emulator name. In this example, joust is a game you emulate with the williams emulator. Critically, the emulator name is used to set the SOURCES= line in the mame build for the emulator.
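To make the rotation handling concrete, here is a small sketch of one way the resolution could be derived. It assumes that a display rotated by 90 or 270 degrees should have its width and height swapped; that rule and the function names are my assumptions, not something lifted from the arkade scripts.

```python
def native_resolution(width, height, rotate):
    """Swap width and height when the screen is mounted sideways."""
    if rotate % 180 == 90:  # 90 or 270 degrees
        return height, width
    return width, height


def loader_call(width, height, rotate):
    """Render the nativeResolution argument used in index.html."""
    w, h = native_resolution(width, height, rotate)
    return f"MAMELoader.nativeResolution({w}, {h})"


if __name__ == "__main__":
    # joust values as they appear in the index.html shown earlier
    print(loader_call(292, 240, 0))
    # a hypothetical vertical-monitor game
    print(loader_call(288, 224, 90))
```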

There’s a m->n relationship between emulator and game (e.g. defender is also a williams game). That mapping is handled using jq and the build/<game>/<game>.json files.
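The same grouping can be sketched in Python instead of jq. The layout of the <game>.json files isn't shown in this post, so the "name" and "emulator" keys below are purely assumed for illustration.

```python
import json
from collections import defaultdict


def games_by_emulator(metadata_docs):
    """Group game names under the emulator that runs them.

    metadata_docs is an iterable of JSON strings, one per game.
    The "name" and "emulator" keys are assumed, not the real schema.
    """
    groups = defaultdict(list)
    for doc in metadata_docs:
        meta = json.loads(doc)
        groups[meta["emulator"]].append(meta["name"])
    return {emulator: sorted(names) for emulator, names in groups.items()}


if __name__ == "__main__":
    docs = [
        '{"name": "joust", "emulator": "williams"}',
        '{"name": "defender", "emulator": "williams"}',
    ]
    print(games_by_emulator(docs))
```

Grouping this way means each emulator only needs to be compiled once, however many games share it.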

Once the build directory is populated, it’s time to build the docker images. There’s a gamedocker.py script that writes a Dockerfile.<game> for each game. After that, it runs docker buildx build --platform linux/arm64... to build up the images.
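A rough sketch of that step, not the actual gamedocker.py. The template is the game Dockerfile shown above; the -f, -t and --push arguments and the docker-registry:5000/<game> tag are assumptions added for illustration, since only --platform linux/arm64 is given here.

```python
import shlex

# Template copied from the game Dockerfile shown earlier in the post.
DOCKERFILE_TEMPLATE = """\
FROM arm64v8/nginx
ADD nginx/default /etc/nginx/conf.d/default.conf
RUN mkdir -p /var/www/html /var/www/html/roms
ADD build/{name}/* /var/www/html
"""


def render_dockerfile(name):
    """Fill the {name} placeholder for a single game."""
    return DOCKERFILE_TEMPLATE.format(name=name)


def buildx_command(name, registry="docker-registry:5000"):
    """Build the argument list for a cross-platform image build.

    Everything after --platform is an assumption, not the real script.
    """
    return [
        "docker", "buildx", "build",
        "--platform", "linux/arm64",
        "-f", f"Dockerfile.{name}",
        "-t", f"{registry}/{name}",
        "--push",
        ".",
    ]


if __name__ == "__main__":
    print(render_dockerfile("joust"))
    # Print the command rather than running it, so the sketch has no side effects.
    print(shlex.join(buildx_command("joust")))
```

Returning the command as a list keeps it ready to hand to subprocess.run without any shell quoting concerns.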

Docker Multiplatform and Add a Registry

I do the building on my 8-core amd64 server, so I needed to do a couple of things to get the images over to my Pi cluster. First I had to set up docker multiplatform builds:

docker run --privileged --rm tonistiigi/binfmt --install all

I also set up a small private docker registry using this docker-compose file:

    sandy@www:/sim/registry$ ls
    config  data  docker-compose.yaml
    sandy@www:/sim/registry$ cat docker-compose.yaml
    version: '3.3'
    services:
      registry:
        container_name: registry
        restart: always
        image: registry:latest
        ports:
          - 5000:5000
        volumes:
          - ./config/config.yml:/etc/docker/registry/config.yml:ro
          - ./data:/var/lib/registry:rw
        #environment:
          #- "STANDALONE=true"
          #- "MIRROR_SOURCE=https://registry-1.docker.io"
          #- "MIRROR_SOURCE_INDEX=https://index.docker.io"

I also had to reconfigure the nodes in the pi cluster to use an insecure registry. To do that I added this bit to my cloud-init configuration:

    write_files:
      - path: /etc/cloud/templates/hosts.debian.tmpl
        append: true
        content: |
          192.168.1.1 docker-registry www
      - path: /etc/rancher/k3s/registries.yaml
        content: |
          mirrors:
            "docker-registry:5000":
              endpoint:
                - "http://docker-registry:5000"
          configs:
            "docker-registry:5000":
              tls:
                insecure_skip_verify: true

Then I reinstalled all the nodes to pull that change.
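One quick way to confirm a node can reach the registry is to ask it for its catalog over plain HTTP. This is a sketch only: the helper names are made up, the hostname comes from the hosts entry above, and the sample response in the usage block is invented.

```python
import json
import urllib.request


def catalog_url(registry="docker-registry:5000"):
    """URL of the registry's repository catalog endpoint."""
    return f"http://{registry}/v2/_catalog"


def parse_catalog(body):
    """Pull the repository names out of a catalog response body."""
    return json.loads(body).get("repositories", [])


def list_repositories(registry="docker-registry:5000", timeout=5):
    """Fetch and parse the catalog; needs network access to the registry."""
    with urllib.request.urlopen(catalog_url(registry), timeout=timeout) as resp:
        return parse_catalog(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Invented sample response, so the sketch runs without a live registry.
    print(parse_catalog('{"repositories": ["20pacgal", "joust"]}'))
```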

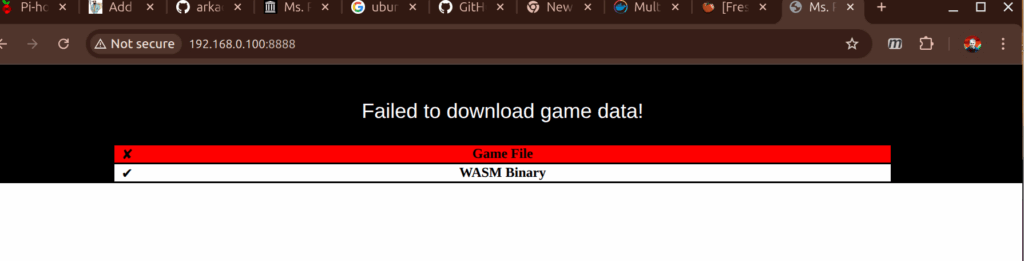

Ultimately, I was able to test it out by creating a little deployment:

    kubectl create deployment 20pacgal --image=docker-registry:5000/20pacgal --replicas=1 --port=80
    sandy@bunsen:~$ kubectl get pods
    NAME                        READY   STATUS    RESTARTS   AGE
    20pacgal-77b777866c-d4dhf   1/1     Running   0          86m
    sandy@bunsen:~$ kubectl port-forward --address 0.0.0.0 20pacgal-77b777866c-d4dhf 8888:80
    Forwarding from 0.0.0.0:8888 -> 80
    Handling connection for 8888
    Handling connection for 8888

Almost there. The rom file is still missing. I’ll need to set up a persistent volume to hold the roms… next time.

-Sandy
