In the last post I built up some Makefiles and Docker tooling to create nginx-based images that serve the MAME emulators. Now it’s time to deploy those images to the Kubernetes cluster using ArgoCD.

GitHub Actions

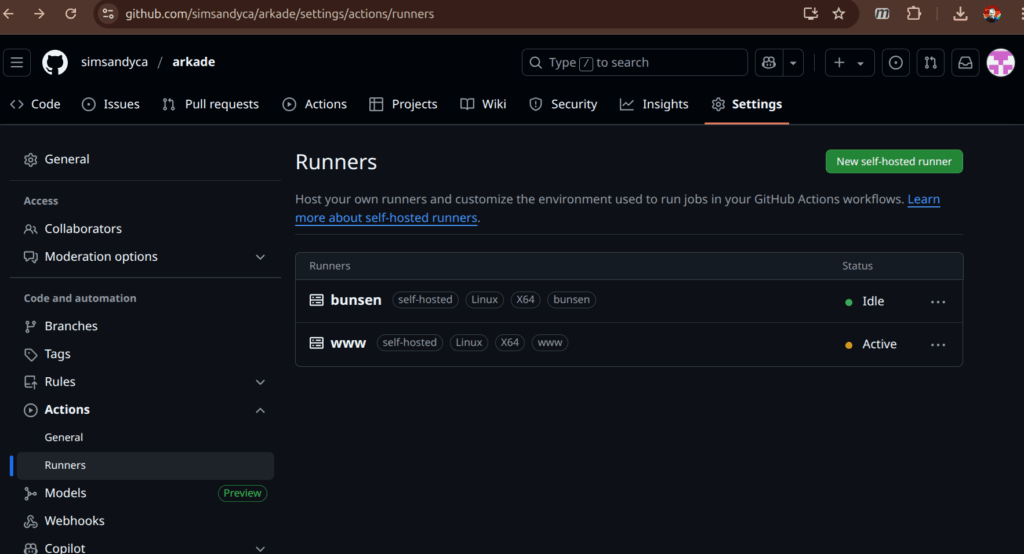

I set up two self-hosted runners for GitHub Actions. The runner on my main server builds the emulators, and the runner on the in-cluster ThinkPad runs deployments with argocd sync.

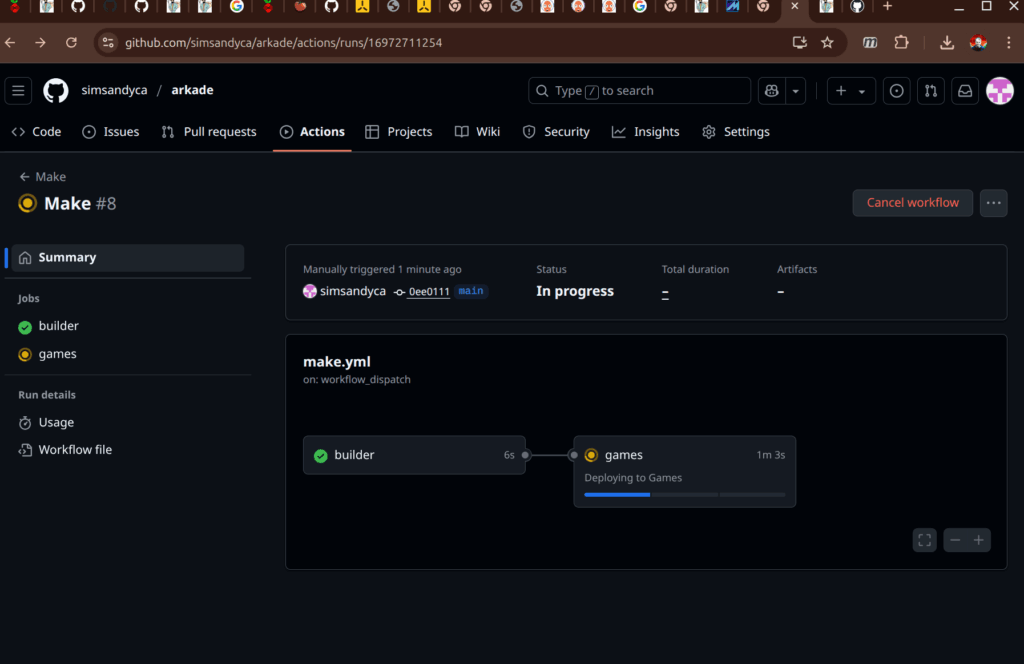

Then I set up a Make workflow and tested it out:

```yaml
name: Make

on:
  workflow_dispatch:

jobs:
  builder:
    environment: Games
    runs-on: www
    steps:
      - uses: actions/checkout@v4
      - name: Make the mamebuilder
        run: |
          make mamebuilder
          docker tag mamebuilder docker-registry:5000/mamebuilder
          docker push docker-registry:5000/mamebuilder

  games:
    environment: Games
    needs: [builder]
    runs-on: www
    steps:
      - uses: actions/checkout@v4
      - name: Make the game images
        run: |
          make
          echo done
```

After I trigger the Make workflow on GitHub, the build runs and the emulator images are pushed to my private Docker registry. Querying the registry from inside the cluster’s network shows:

```console
sandy@bunsen:~/arkade/.github/workflows$ curl -X GET http://docker-registry:5000/v2/_catalog
{"repositories":["1943mii","20pacgal","centiped","circus","defender","dkong","gng","invaders","joust","mamebuilder","milliped","pacman","qix","robby","supertnk","tempest","topgunnr","truxton","victory"]}
```
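The catalog comes back as a single line of JSON. To get one repository per line (say, to compare against the game list in the Makefile), a small filter is enough. This is a minimal sketch that assumes the compact one-line response shown above and no jq; `list_repos` is a hypothetical name. The same Registry v2 API also lists each repository's tags at `/v2/<name>/tags/list`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a Docker Registry v2 /_catalog response on stdin
# and print one repository name per line.
# Assumes compact JSON like {"repositories":["a","b"]} (no jq needed).
list_repos() {
  # 1) keep only the text between the [ ] after "repositories":
  # 2) split the entries on commas
  # 3) strip the surrounding quotes and drop empty lines (empty catalog)
  sed -n 's/.*"repositories":\[\(.*\)].*/\1/p' \
    | tr ',' '\n' \
    | sed -e 's/^"//' -e 's/"$//' -e '/^$/d'
}

# Usage (from inside the cluster network):
#   curl -s http://docker-registry:5000/v2/_catalog | list_repos
```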

Helm Chart

Next I worked on a Helm chart for the emulators, starting from the standard scaffold:

```console
mkdir helm
cd helm
helm create game
```

On top of the default chart, I added a Traefik IngressRoute to go with each deployment and a volume mount for the /var/www/html/roms directory. The final layout looks like this:

```text
sandy@bunsen:~/arkade$ tree helm roms-pvc.yaml
helm
└── game
    ├── charts
    ├── Chart.yaml
    ├── templates
    │   ├── deployment.yaml
    │   ├── _helpers.tpl
    │   ├── hpa.yaml
    │   ├── ingressroute.yaml
    │   ├── ingress.yaml
    │   ├── NOTES.txt
    │   ├── serviceaccount.yaml
    │   ├── service.yaml
    │   └── tests
    │       └── test-connection.yaml
    └── values.yaml
roms-pvc.yaml [error opening dir]
```

Most of the customization is in values.yaml. A diff against the default looks like this:

```diff
sandy@bunsen:~/arkade/helm/game$ diff values.yaml ~/test/game/
25c25
<   create: false
---
>   create: true
76,78d75
< ingressroute:
<   enabled: true
<
110,113c107,111
< volumes:
< - name: roms
<   persistentVolumeClaim:
<     claimName: roms
---
> volumes: []
> # - name: foo
> #   secret:
> #     secretName: mysecret
> #     optional: false
116,118c114,117
< volumeMounts:
< - name: roms
<   mountPath: /var/www/html/roms
---
> volumeMounts: []
> # - name: foo
> #   mountPath: "/etc/foo"
> #   readOnly: true
```

The Traefik IngressRoute object takes the place of the usual Kubernetes Ingress. I also added a few Makefile targets that package the chart and deploy all of the emulators:

```makefile
package:
	$(HELM) package --version $(CHART_VER) helm/game

install:
	@for game in $(GAMES) ; do \
		$(HELM) install $$game game-$(CHART_VER).tgz \
			--set image.repository="docker-registry:5000/$$game" \
			--set image.tag='latest' \
			--set fullnameOverride="$$game" \
			--create-namespace \
			--namespace games ; \
	done

upgrade:
	@for game in $(GAMES) ; do \
		$(HELM) upgrade $$game game-$(CHART_VER).tgz \
			--set image.repository="docker-registry:5000/$$game" \
			--set image.tag='latest' \
			--set fullnameOverride="$$game" \
			--namespace games ; \
	done
```
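The install and upgrade loops differ only in the Helm verb. Helm’s `upgrade --install` covers both cases: it installs the release if it doesn’t exist yet and upgrades it otherwise. Here is a minimal sketch of the same loop as a bash function; `deploy_games` is a hypothetical name, and `HELM` defaults to `helm` to mirror the Makefile’s `$(HELM)`.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the Makefile's install/upgrade loops.
# HELM defaults to "helm"; set HELM=echo to preview the commands.
HELM=${HELM:-helm}

# deploy_games CHART_TGZ GAME...: install or upgrade one release per game.
deploy_games() {
  local chart=$1
  shift
  local game
  for game in "$@"; do
    # --install creates the release if it is missing, upgrades it otherwise;
    # --create-namespace only applies when the release is being installed.
    "$HELM" upgrade --install "$game" "$chart" \
      --set image.repository="docker-registry:5000/$game" \
      --set image.tag=latest \
      --set fullnameOverride="$game" \
      --create-namespace \
      --namespace games || return 1
  done
}

# Usage (CHART_VER stands for the version passed to "make package"):
#   deploy_games "game-${CHART_VER}.tgz" victory pacman
```

With that, a single target could replace both install and upgrade, and rerunning it is harmless.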

The initial install of all the emulators is `make package install`. Everything goes into a namespace called games, so it’s relatively easy to clean up the whole mess with `kubectl delete ns games`. I did have to make one adjustment to the Helm chart: Kubernetes requires Service names to be RFC 1035 labels, which must start with a letter. I was using the game name as the Service name, but names like 1943mii start with a digit and don’t conform. To get around it, I prefixed the Service name in the chart: `name: svc-{{ include "game.fullname" . }}`.
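To see ahead of time which game names would be rejected, you can test them against the DNS-1035 label rule Kubernetes applies to Service names: at most 63 characters, lowercase letters, digits and `-`, starting with a letter and ending with a letter or digit. This is a sketch using the regex Kubernetes documents for DNS-1035 labels; `is_dns1035_label` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if NAME is a valid DNS-1035 label, the rule
# Kubernetes applies to Service names: [a-z]([-a-z0-9]*[a-z0-9])?, max 63 chars.
is_dns1035_label() {
  local name=$1
  local re='^[a-z]([-a-z0-9]*[a-z0-9])?$'
  [[ ${#name} -le 63 && $name =~ $re ]]
}

# Report which game names need the svc- prefix.
for game in 1943mii 20pacgal victory; do
  if is_dns1035_label "$game"; then
    echo "$game: ok"
  else
    echo "$game: invalid, use svc-$game"
  fi
done
```

Prefixing every Service with a fixed lowercase string, as the chart does, sidesteps the leading-digit problem for all games at once.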

ArgoCD Setup

I set up ArgoCD in the cluster following the Getting Started guide, and eventually wrapped the steps in a little script, argocd.sh, so I could repeat the setup:

```bash
#!/bin/bash

ARGOCD_PASSWORD='REDACTED'

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

sleep 30

kubectl -n argocd patch configmap/argocd-cmd-params-cm \
  --type merge \
  -p '{"data":{"server.insecure":"true"}}'

kubectl -n argocd apply -f argocd_IngressRoute.yaml

sleep 30

INITIAL_PASSWORD=$(argocd admin initial-password -n argocd 2>/dev/null | awk '{print $1; exit}')

argocd login argocd --username admin --insecure --skip-test-tls --password "${INITIAL_PASSWORD}"

argocd account update-password --account admin --current-password "${INITIAL_PASSWORD}" --new-password "${ARGOCD_PASSWORD}"
```

That installs ArgoCD into its own namespace and exposes it through a Traefik IngressRoute. The ConfigMap patch runs the ArgoCD server in insecure (plain HTTP) mode, which avoids redirect loops since Traefik already handles HTTPS at the LoadBalancer. Finally, the script logs in as the admin user and updates the password.
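The two `sleep 30` calls are the fragile part of the script: on a slow node 30 seconds may not be enough, and on a fast one it is wasted time. One alternative, sketched here under the assumption of bash and the standard install.yaml (which creates an `argocd-server` Deployment), is to wait on the rollout and retry commands until they succeed. `retry` is a hypothetical helper, not part of the original script. Also note that the ArgoCD docs say changes to `argocd-cmd-params-cm` only take effect once the affected component restarts, so `kubectl -n argocd rollout restart deployment argocd-server` after the patch may be needed if the server pod was already running.

```bash
#!/usr/bin/env bash
# Hypothetical helper: retry ATTEMPTS DELAY CMD [ARGS...]
# Runs CMD until it exits 0, sleeping DELAY seconds between attempts.
# Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    # No point sleeping after the final failed attempt.
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}

# Possible replacements for the fixed sleeps in argocd.sh:
#   kubectl -n argocd rollout status deployment/argocd-server --timeout=300s
#   INITIAL_PASSWORD=$(retry 30 5 argocd admin initial-password -n argocd 2>/dev/null | awk '{print $1; exit}')
```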

Then I added a couple more targets to the Makefile:

```makefile
argocd_create:
	$(KUBECTL) create ns games || true
	$(KUBECTL) apply -f roms-pvc.yaml
	@for game in $(GAMES) ; do \
		$(ARGOCD) app create $$game \
			--repo https://github.com/simsandyca/arkade.git \
			--path helm/game \
			--dest-server https://kubernetes.default.svc \
			--dest-namespace games \
			--helm-set image.repository="docker-registry:5000/$$game" \
			--helm-set image.tag='latest' \
			--helm-set fullnameOverride="$$game" ; \
	done

argocd_sync:
	@for game in $(GAMES) ; do \
		$(ARGOCD) app sync $$game ; \
	done
```

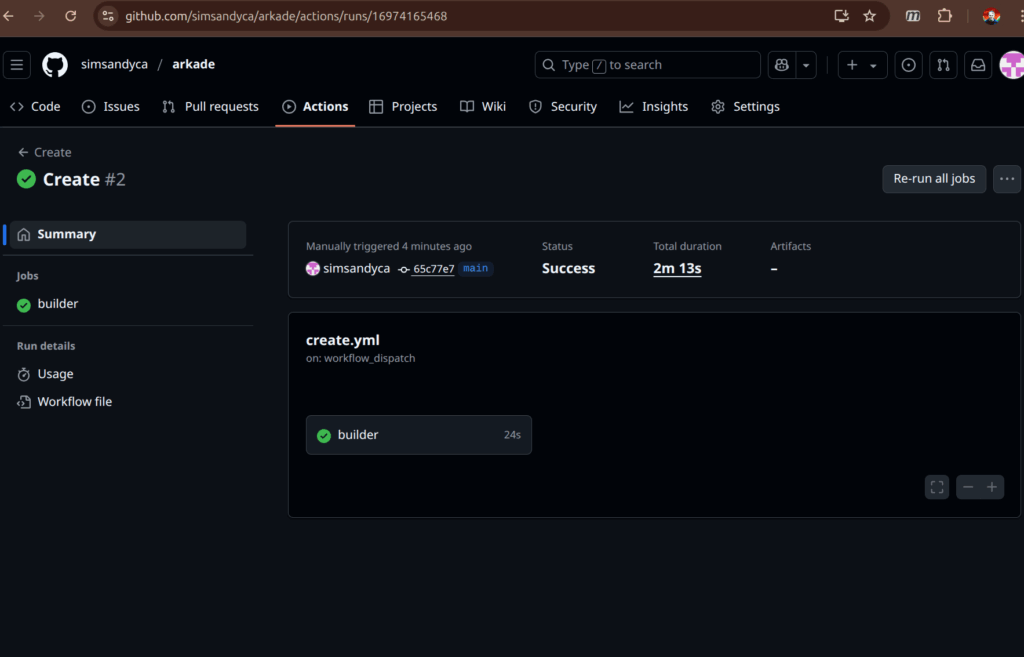

And I added a workflow in GitHub Actions:

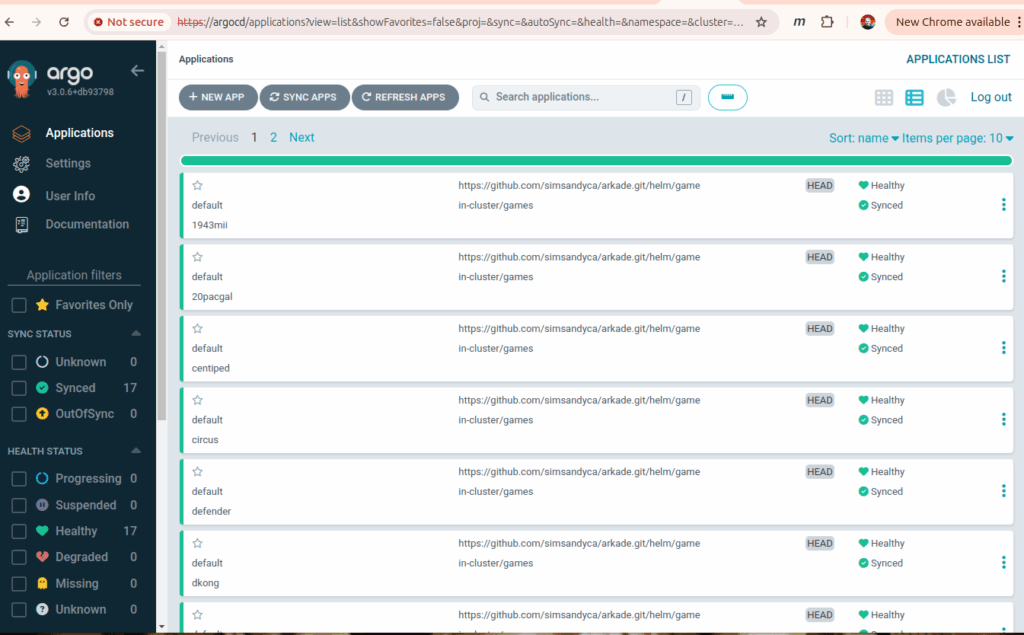

Ultimately I get this dashboard in ArgoCD with all the emulators running.

Sync is manual here, but the beauty of ArgoCD is that it tracks your git repo and can deploy the changes automatically.
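Switching to automatic deployment is a per-application setting. A minimal sketch, assuming the apps already exist from `argocd_create`: `argocd app set` with `--sync-policy automated` turns it on, and `--self-heal` and `--auto-prune` are optional extras. `enable_autosync` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: switch existing ArgoCD apps from manual to automated sync.
# ARGOCD defaults to "argocd"; set ARGOCD=echo to preview the commands.
ARGOCD=${ARGOCD:-argocd}

enable_autosync() {
  local game
  for game in "$@"; do
    # automated:    sync whenever the tracked git revision changes
    # --self-heal:  re-sync when the live state drifts from git
    # --auto-prune: delete resources that were removed from git
    "$ARGOCD" app set "$game" --sync-policy automated --self-heal --auto-prune || return 1
  done
}

# Usage:
#   enable_autosync victory pacman
```

The same flags are accepted by `argocd app create`, so they could also be added to the `argocd_create` target directly.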

Try It Out

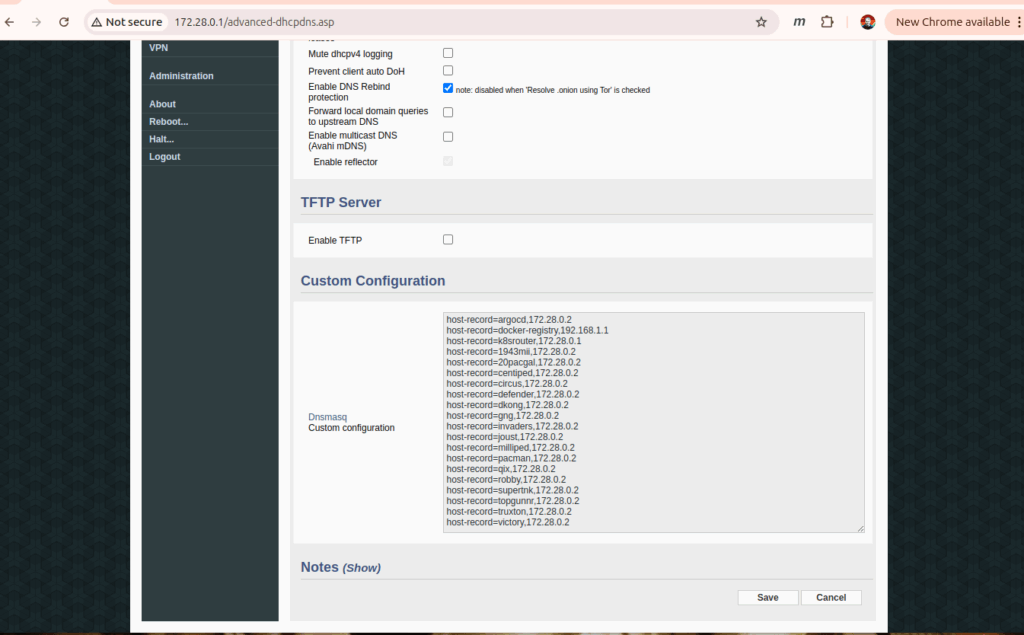

To use the IngressRoutes, I need a DNS entry for each game. The Pi cluster sits behind an old ASUS router running FreshTomato, so I can add those as dnsmasq host-record entries:
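Typing one line per game gets tedious with nearly twenty emulators. This sketch prints dnsmasq `host-record=NAME,IPv4` lines for a list of games. The IP in the usage line is a placeholder for whatever address Traefik’s LoadBalancer answers on, and `dnsmasq_records` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print dnsmasq host-record lines pointing each
# game hostname at the same (Traefik LoadBalancer) address.
# dnsmasq_records IP GAME...
dnsmasq_records() {
  local ip=$1
  shift
  local game
  for game in "$@"; do
    printf 'host-record=%s,%s\n' "$game" "$ip"
  done
}

# Usage (192.0.2.10 is a placeholder address):
#   dnsmasq_records 192.0.2.10 victory pacman 1943mii
```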

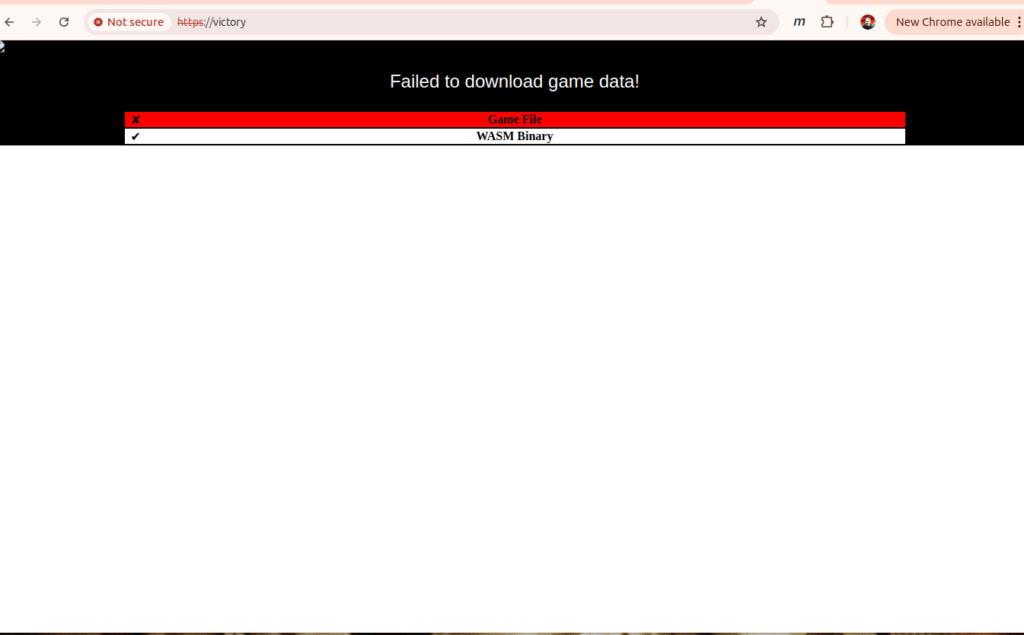

Let’s try loading https://victory

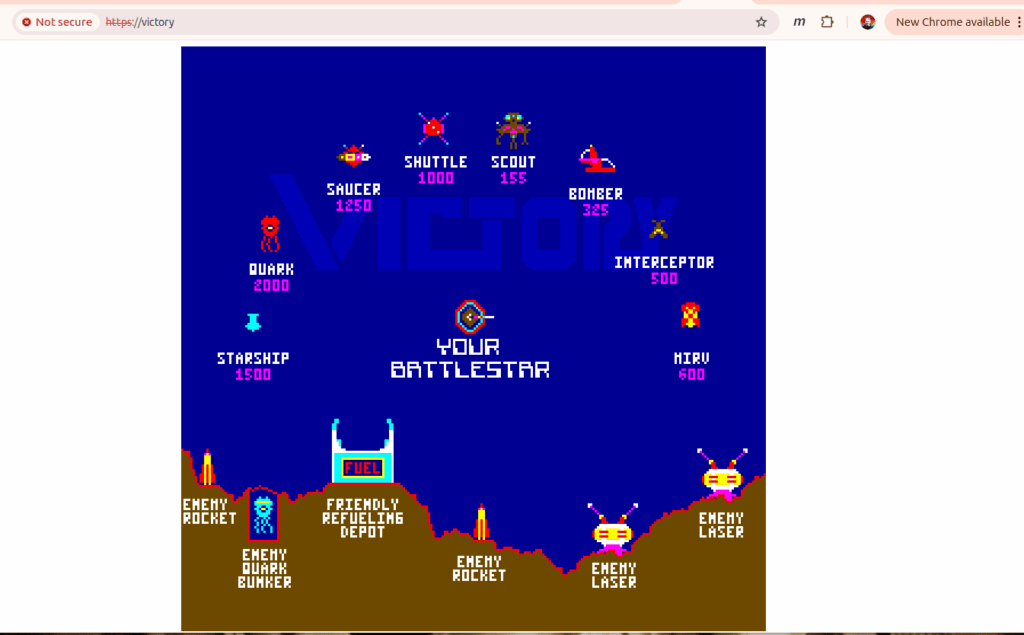

Oh, that’s right: no game ROMs yet. To test it, I need a royalty-free ROM; there are a few available for personal use on the MAME Project page. To load victory.zip, I can do something like this:

```console
sandy@bunsen:~/arkade$ kubectl -n games get pod -l app.kubernetes.io/instance=victory
NAME                       READY   STATUS    RESTARTS   AGE
victory-5d695d668c-tj7ch   1/1     Running   0          12m
sandy@bunsen:~/arkade$ kubectl -n games cp ~/roms/victory.zip victory-5d695d668c-tj7ch:/var/www/html/roms/
```
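Looking up the pod name by hand is fine for one ROM. This sketch does the lookup with a JSONPath query and then copies the file. It assumes the `app.kubernetes.io/instance` label and the /var/www/html/roms mount from the chart above; `copy_rom` is a hypothetical name. Since every release mounts the same roms claim, a ROM copied through one pod should end up in the shared volume.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy_rom GAME ZIPFILE
# Finds the pod for a game's Helm release and copies the ROM into its
# /var/www/html/roms mount. KUBECTL defaults to "kubectl".
KUBECTL=${KUBECTL:-kubectl}

copy_rom() {
  local game=$1 zip=$2 pod
  # Name of the first pod carrying the release's instance label.
  pod=$("$KUBECTL" -n games get pod -l "app.kubernetes.io/instance=$game" \
    -o jsonpath='{.items[0].metadata.name}') || return 1
  if [[ -z $pod ]]; then
    echo "no pod found for $game" >&2
    return 1
  fi
  "$KUBECTL" -n games cp "$zip" "$pod:/var/www/html/roms/"
}

# Usage:
#   copy_rom victory ~/roms/victory.zip
```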

Then, from the bunsen laptop behind the router (after clicking through the self-signed certificate warning), I can load https://victory and click the launch button…

On to CI/CD?

For now, this feels like about as far as I can take the Arkade project. Is it full-on continuous delivery? No, but it’s about all I’d attempt with a personal GitHub account running self-hosted runners. It’s close, though: the builds are automated, ArgoCD is configured and watching the repo for changes, and there are GitHub Actions workflows in place to run the builds. I’d still need some versioning on the image tags to get rollback targets and release tracking… maybe that’s next.

-Sandy
