This time I’m going to add k6 load testing to the Arkade Kubernetes cluster. k6 gives you a JavaScript interface to set up load testing against a group of web servers. I’m going to try to set it up to test the various game servers in my pi-cluster.

Tools

Install the k6 command line tool. I added this to my tools.sh setup script:

echo "Install k6"

sudo gpg -k

sudo gpg --no-default-keyring --keyring /usr/share/keyrings/k6-archive-keyring.gpg --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys C5AD17C747E3415A3642D57D77C6C491D6AC1D69

echo "deb [signed-by=/usr/share/keyrings/k6-archive-keyring.gpg] https://dl.k6.io/deb stable main" | sudo tee /etc/apt/sources.list.d/k6.list

sudo apt-get update

sudo apt-get install k6

Install The k6-operator

Next I installed the k6-operator in the cluster. That’s done with Helm as shown below:

$ cat k6.sh

#!/bin/bash

. ./functions.sh

NAMESPACE=k6

info "Setup k6 community helm repo"

helm repo add grafana https://grafana.github.io/helm-charts

helm repo update

info "Install the k6 operator in the '$NAMESPACE' namespace"

helm upgrade --install k6-operator grafana/k6-operator \
  --namespace $NAMESPACE \
  --create-namespace

The Arkade Test Case

A k6 test case is declared in JavaScript. Here’s what I came up with to drive each of the game servers.

$ cat arkade_loadtest.js

// k6.js

import http from 'k6/http';

import { sleep } from 'k6';

export const options = {

vus: 10,

duration: '30s',

};

export default function () {

const games = ["1943mii","20pacgal","circus","centiped","defender","dkong","gng",

"invaders","joust","milliped","pacman","qix","robby","supertnk",

"topgunnr","truxton","victory"];

games.forEach((game, index) => {

http.get('https://' + game);

});

sleep(1);

}

Test The Test

The first thing to try is running the test using the k6 CLI.

$ k6 run arkade_loadtest.js

         /\      Grafana   /‾‾/
    /\  /  \     |\  __   /  /
   /  \/    \    | |/ /  /   ‾‾\
  /          \   |   (  |  (‾)  |
 / __________ \  |_|\_\  \_____/

execution: local

script: arkade_loadtest.js

output: -

scenarios: (100.00%) 1 scenario, 10 max VUs, 1m0s max duration (incl. graceful stop):

* default: 10 looping VUs for 30s (gracefulStop: 30s)

█ TOTAL RESULTS

HTTP

http_req_duration..............: avg=3.55ms min=1.88ms med=2.76ms max=41.26ms p(90)=4.65ms p(95)=8ms

{ expected_response:true }...: avg=3.55ms min=1.88ms med=2.76ms max=41.26ms p(90)=4.65ms p(95)=8ms

http_req_failed................: 0.00% 0 out of 4760

http_reqs......................: 4760 157.511476/s

EXECUTION

iteration_duration.............: avg=1.07s min=1.04s med=1.05s max=1.53s p(90)=1.08s p(95)=1.15s

iterations.....................: 280 9.265381/s

vus............................: 10 min=10 max=10

vus_max........................: 10 min=10 max=10

NETWORK

data_received..................: 26 MB 857 kB/s

data_sent......................: 480 kB 16 kB/s

running (0m30.2s), 00/10 VUs, 280 complete and 0 interrupted iterations

default ✓ [======================================] 10 VUs 30s

The test loops over all the game servers, requesting each one’s main index page: 4,760 requests in 30 seconds with no failures.
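As a sanity check on those numbers, the request count follows directly from the script: each iteration fetches every game once, so requests = iterations × games. Here is a quick sketch in plain JavaScript (runnable in Node, outside k6) using the figures from the run above. The variable names are only for illustration.

```javascript
// Plain JavaScript (not a k6 script): cross-check the summary figures above.
const games = ["1943mii","20pacgal","circus","centiped","defender","dkong","gng",
               "invaders","joust","milliped","pacman","qix","robby","supertnk",
               "topgunnr","truxton","victory"];

const iterations = 280;            // "iterations" from the k6 summary
const avgRequestSeconds = 0.00355; // "http_req_duration" avg (3.55ms)
const sleepSeconds = 1;            // sleep(1) at the end of each iteration

// Every iteration requests each game's index page exactly once.
const expectedRequests = iterations * games.length;

// Rough time per iteration: the requests run one after another, then the sleep.
const estimatedIterationSeconds = sleepSeconds + games.length * avgRequestSeconds;

console.log(games.length);                         // 17
console.log(expectedRequests);                     // 4760, matching http_reqs
console.log(estimatedIterationSeconds.toFixed(2)); // 1.06
```

The estimate of about 1.06s per iteration sits close to the reported 1.07s average, so nearly all of each iteration is the one-second sleep.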

Run The Test in Kubernetes

Now load the test script into a ConfigMap and create a TestRun object to set up and run it:

kubectl create configmap arkade-loadtest --from-file=./arkade_loadtest.js

$ cat arkade_TestRun.yaml

apiVersion: k6.io/v1alpha1

kind: TestRun

metadata:

name: arkade-testrun

spec:

parallelism: 2

script:

configMap:

name: arkade-loadtest

file: arkade_loadtest.js

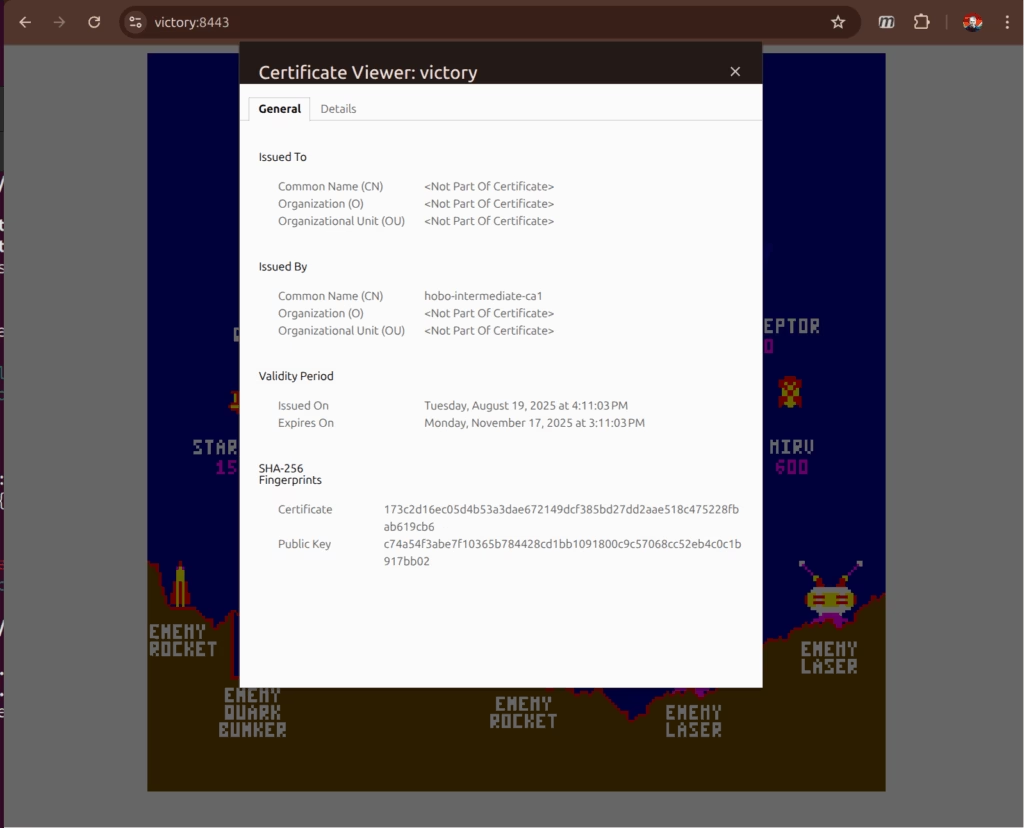

arguments: "--insecure-skip-tls-verify"

$ kubectl apply -f arkade_TestRun.yaml

testrun.k6.io/arkade-testrun created

I had to disable TLS certificate verification to run it in the cluster because the runner pods don’t have access to the self signed certificate.
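The same switch can also live in the script’s options instead of the TestRun arguments. This is a minimal sketch, assuming the options block from arkade_loadtest.js. `insecureSkipTLSVerify` is the k6 option that corresponds to the `--insecure-skip-tls-verify` flag. It is written as a plain `const` here so the snippet stands alone; in the real script it would keep its `export`.

```javascript
// Sketch only: in arkade_loadtest.js this would be `export const options = {...}`.
const options = {
  vus: 10,
  duration: '30s',
  // Skip certificate verification for the self-signed certificate,
  // the in-script counterpart of --insecure-skip-tls-verify.
  insecureSkipTLSVerify: true,
};
```

This is an alternative that was not part of the run shown here, so treat it as untested against this cluster.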

Now monitor the test run:

$ kubectl get jobs

NAME STATUS COMPLETIONS DURATION AGE

arkade-loadtest-1 Running 0/1 0s 0s

arkade-loadtest-2 Running 0/1 0s 0s

arkade-loadtest-initializer Complete 1/1 7s 7s

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

arkade-loadtest-1-j864z 1/1 Running 0 17s

arkade-loadtest-2-t6r2v 1/1 Running 0 17s

arkade-loadtest-initializer-r5pgt 0/1 Completed 0 24s

arkade-loadtest-starter-pq2mh 0/1 Completed 0 13s

And pull the results:

$ kubectl logs arkade-loadtest-1-j864z

█ TOTAL RESULTS

HTTP

http_req_duration..............: avg=5.6ms min=2.4ms med=4.02ms max=102.11ms p(90)=9.39ms p(95)=12.75ms

{ expected_response:true }...: avg=5.6ms min=2.4ms med=4.02ms max=102.11ms p(90)=9.39ms p(95)=12.75ms

http_req_failed................: 0.00% 0 out of 2312

http_reqs......................: 2312 74.683635/s

EXECUTION

iteration_duration.............: avg=1.12s min=1.05s med=1.07s max=1.62s p(90)=1.2s p(95)=1.25s

iterations.....................: 136 4.393155/s

vus............................: 5 min=0 max=5

vus_max........................: 5 min=5 max=5

NETWORK

data_received..................: 13 MB 406 kB/s

data_sent......................: 234 kB 7.6 kB/s

Run The Load Test Every Hour

Now let’s automate the test run so it goes every hour. I used a Kubernetes CronJob to do that. The CronJob uses kubectl to delete and reapply the TestRun object on a schedule:

$ cat k6_CronJob.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: arkade-loadtest-cron
spec:
  schedule: "0 * * * *"
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccount: k6
          containers:
          - name: kubectl
            image: bitnami/kubectl
            volumeMounts:
            - name: arkade-testrun
              mountPath: /tmp/
            command:
            - /bin/bash
            args:
            - -c
            - 'kubectl delete -f /tmp/arkade_TestRun.yaml; kubectl apply -f /tmp/arkade_TestRun.yaml'
          restartPolicy: OnFailure
          volumes:
          - name: arkade-testrun
            configMap:
              name: arkade-testrun

The arkade-loadtest-cron CronJob depends on a ServiceAccount and two ConfigMaps. The k6 ServiceAccount gives the job permission to run kubectl delete/apply on the TestRun objects. One ConfigMap holds the test script (as above) and the other holds the definition of the TestRun that was applied manually earlier.

The ServiceAccount definition goes like this:

$ kubectl --namespace $NAMESPACE apply -f k6_ServiceAccount.yaml

$ cat k6_ServiceAccount.yaml

---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: k6
rules:
- apiGroups:
  - k6.io
  resources:
  - testruns
  verbs:
  - create
  - delete
  - get
  - list
  - patch
  - update
  - watch
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: k6
roleRef:
  kind: Role
  name: k6
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: k6
  namespace: k6
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: k6

The ConfigMaps are created like this:

$ kubectl --namespace $NAMESPACE create configmap arkade-loadtest --from-file=./arkade_loadtest.js

$ kubectl --namespace $NAMESPACE create configmap arkade-testrun --from-file=./arkade_TestRun.yaml

Test The CronJob

Rather than wait for the top of the hour for the CronJob to fire, just create a job from it manually right now:

$ kubectl -n k6 delete job testrun

job.batch "testrun" deleted

$ kubectl -n k6 create job --from=cronjob/arkade-loadtest-cron testrun

job.batch/testrun created

Then monitor the run:

$ kubectl -n k6 get jobs

NAME STATUS COMPLETIONS DURATION AGE

arkade-loadtest-cron-29286600 Complete 1/1 3m38s 26m

arkade-testrun-1 Running 0/1 16s 16s

arkade-testrun-2 Running 0/1 16s 16s

arkade-testrun-initializer Complete 1/1 6s 21s

arkade-testrun-starter Complete 1/1 8s 12s

testrun Complete 1/1 7s 25s

Then pull the results:

$ kubectl -n k6 logs arkade-testrun-1-ft84q

█ TOTAL RESULTS

HTTP

http_req_duration..............: avg=4.49ms min=2.54ms med=3.6ms max=50.77ms p(90)=6.38ms p(95)=9.28ms

{ expected_response:true }...: avg=4.49ms min=2.54ms med=3.6ms max=50.77ms p(90)=6.38ms p(95)=9.28ms

http_req_failed................: 0.00% 0 out of 2380

http_reqs......................: 2380 76.901145/s

EXECUTION

iteration_duration.............: avg=1.1s min=1.05s med=1.07s max=1.61s p(90)=1.14s p(95)=1.15s

iterations.....................: 140 4.523597/s

vus............................: 2 min=0 max=5

vus_max........................: 5 min=5 max=5

NETWORK

data_received..................: 13 MB 418 kB/s

data_sent......................: 240 kB 7.8 kB/s

$ kubectl -n k6 logs arkade-testrun-2-sgc4v

█ TOTAL RESULTS

HTTP

http_req_duration..............: avg=4.68ms min=2.66ms med=3.61ms max=51.45ms p(90)=6.9ms p(95)=9.37ms

{ expected_response:true }...: avg=4.68ms min=2.66ms med=3.61ms max=51.45ms p(90)=6.9ms p(95)=9.37ms

http_req_failed................: 0.00% 0 out of 2329

http_reqs......................: 2329 75.377741/s

EXECUTION

iteration_duration.............: avg=1.1s min=1.05s med=1.07s max=1.65s p(90)=1.15s p(95)=1.25s

iterations.....................: 137 4.433985/s

vus............................: 2 min=0 max=5

vus_max........................: 5 min=5 max=5

NETWORK

data_received..................: 13 MB 410 kB/s

data_sent......................: 236 kB 7.6 kB/s
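Each runner pod only reports its own share of the load (5 of the 10 VUs each with parallelism: 2), so the totals for the whole run have to be added up by hand. Here is a small sketch in plain JavaScript with the numbers copied from the two logs above. The object layout is just for illustration.

```javascript
// Plain JavaScript (not a k6 script): combine the two runner summaries above.
const runners = [
  { pod: "arkade-testrun-1-ft84q", requests: 2380, iterations: 140, vusMax: 5 },
  { pod: "arkade-testrun-2-sgc4v", requests: 2329, iterations: 137, vusMax: 5 },
];

// Sum each counter across the runner pods.
const total = runners.reduce(
  (sum, r) => ({
    requests: sum.requests + r.requests,
    iterations: sum.iterations + r.iterations,
    vusMax: sum.vusMax + r.vusMax,
  }),
  { requests: 0, iterations: 0, vusMax: 0 }
);

console.log(total.requests);   // 4709
console.log(total.iterations); // 277
console.log(total.vusMax);     // 10
```

Those totals line up with the local run earlier (4760 requests, 280 iterations, 10 VUs).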

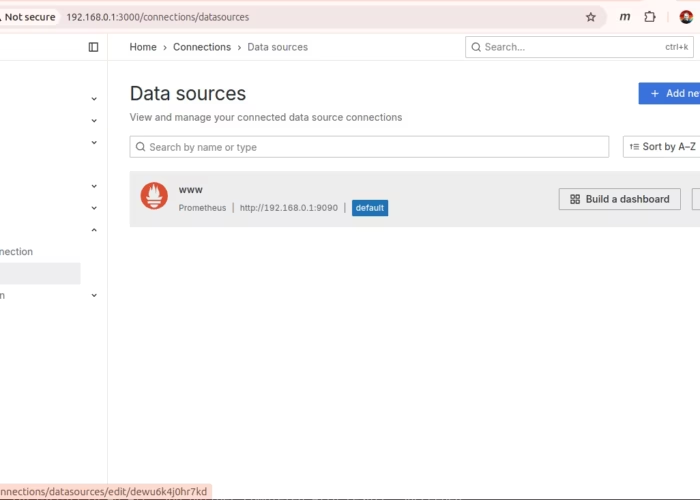

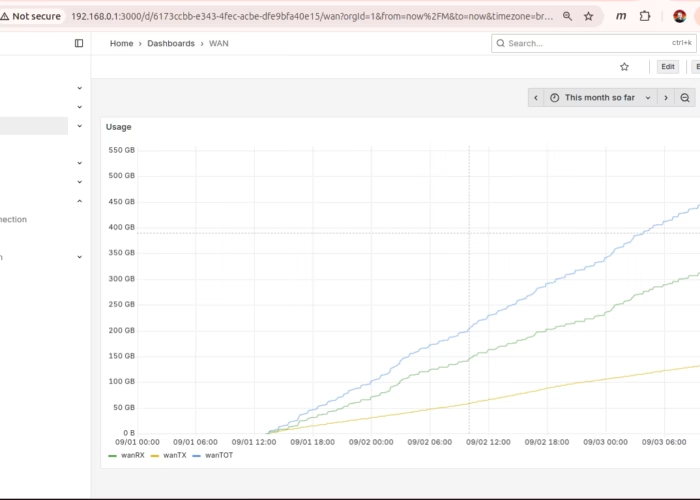

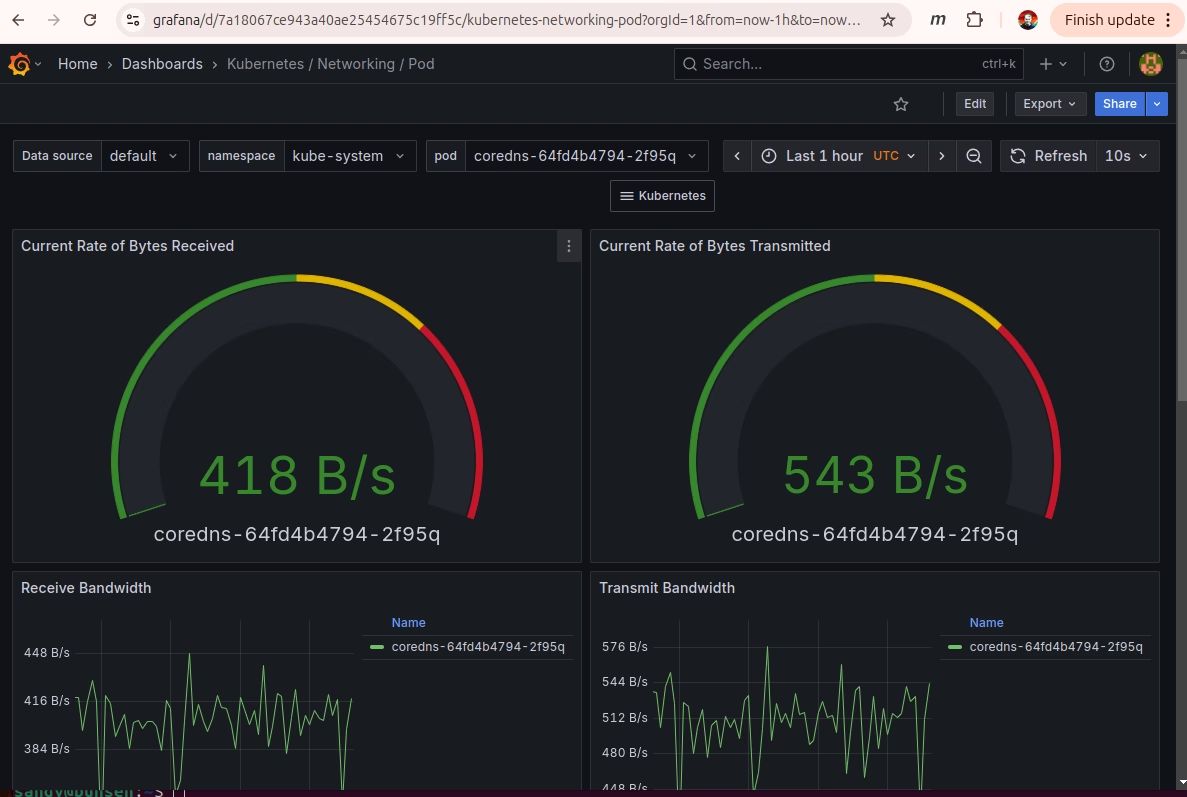

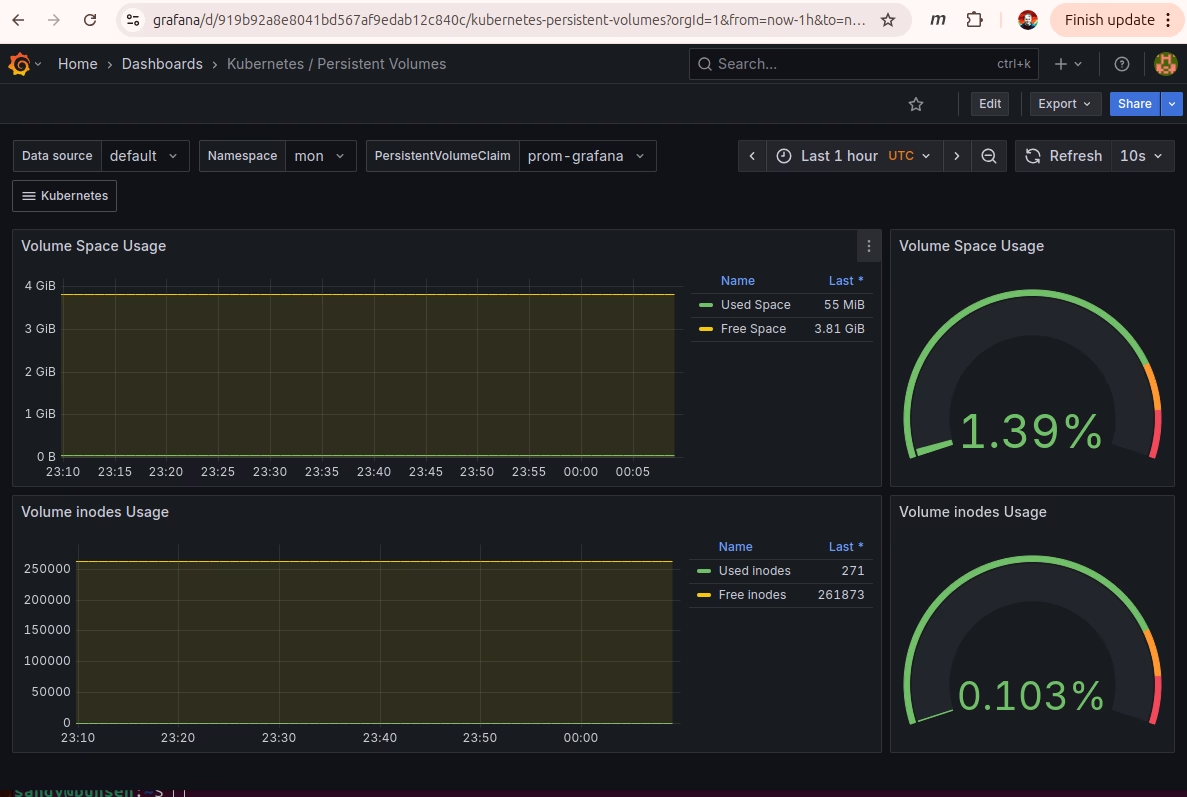

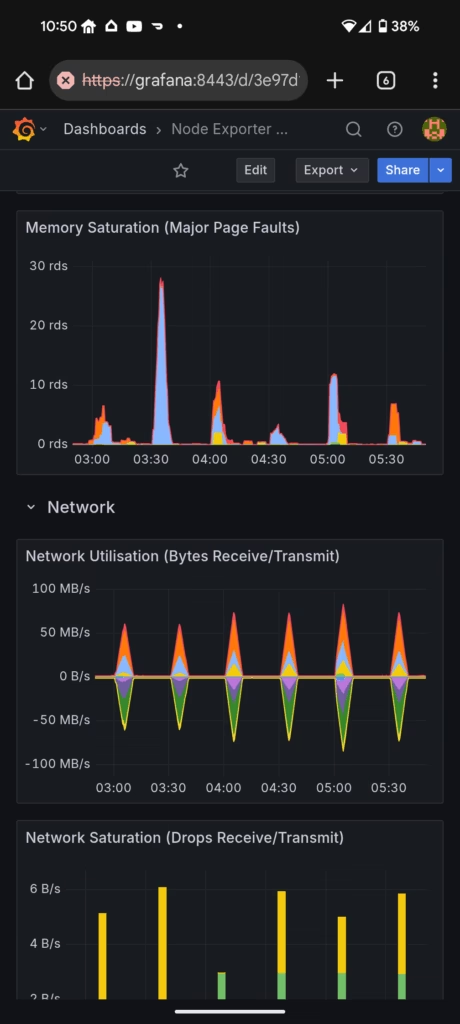

I’ll wait for the hour to tick over and check the test results again. A likely next step would be to review my Grafana monitoring setup during a test run and also look into pulling the test results over to my dashboards.

It works! I changed the tests to run for 10 minutes every half hour. Nice sharp traffic peaks!
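For reference, a change like that touches two places: the duration in the script’s options and the schedule in the CronJob. The exact values aren’t shown in this post, so the ones below are assumptions that match the description. As before, the options object would carry its `export` in the real script.

```javascript
// Assumed values; the post only says "10 minutes duration every half hour".
const options = {
  vus: 10,
  duration: '10m', // was '30s'
};

// Assumed CronJob schedule for k6_CronJob.yaml (was "0 * * * *").
const schedule = "*/30 * * * *";
```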

-Sandy