So there’s a problem in the Kubernetes pi-cluster… When I dump a list of pods vs. nodes it looks like this:

sandy@bunsen:~/arkade$ kubectl -n games get pods -ojsonpath='{range .items[*]}{.metadata.name},{.spec.nodeName}{"\n"}{end}'

1943mii-b59f897f-tkzzj,node-100000001b5d3ab7

20pacgal-df4b8848c-dmgxj,node-100000001b5d3ab7

centiped-9c676978c-pdhh7,node-100000001b5d3ab7

circus-7c755f8859-m8t7l,node-100000001b5d3ab7

defender-654d5cbfc5-pv7xk,node-100000001b5d3ab7

dkong-5cfb8465c-zbsd6,node-100000001b5d3ab7

gng-6d5c97d9b7-9vvhn,node-100000001b5d3ab7

invaders-76c46cb6f5-mr9pn,node-100000001b5d3ab7

joust-ff654f5b9-c5bnv,node-100000001b5d3ab7

milliped-86bf6ddd95-xphhg,node-100000001b5d3ab7

pacman-559b59df59-9mkvq,node-100000001b5d3ab7

qix-7d5995ff79-cdt4d,node-100000001b5d3ab7

robby-5947cf94b7-w4cfq,node-100000001b5d3ab7

supertnk-5dbbffdf7f-9v4vd,node-100000001b5d3ab7

topgunnr-c8fb7467f-nlvzn,node-100000001b5d3ab7

truxton-76bf94c65f-72hbt,node-100000001b5d3ab7

victory-5d695d668c-d9wth,node-100000001b5d3ab7

All the pods are on the same node! But I’ve got three nodes and a control plane running:

sandy@bunsen:~/arkade$ kubectl get nodes

NAME                    STATUS   ROLES                  AGE   VERSION
node-100000001b5d3ab7   Ready    <none>                 39m   v1.33.3+k3s1
node-100000008d83d984   Ready    <none>                 33m   v1.33.3+k3s1
node-10000000e5fe589d   Ready    <none>                 39m   v1.33.3+k3s1
node-6c1a0ae425e8665f   Ready    control-plane,master   45m   v1.33.3+k3s1
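With more pods that listing gets hard to eyeball, so a per-node tally can make the imbalance obvious. Here is a minimal sketch, assuming the same `name,node` output format as the jsonpath command above; `pods_per_node` is just a helper name made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: reads "pod,node" lines on stdin
# and prints one "node count" line per node.
pods_per_node() {
  cut -d, -f2 | sort | uniq -c | awk '{print $2, $1}'
}

# Usage (needs kubectl access to the cluster):
# kubectl -n games get pods \
#   -ojsonpath='{range .items[*]}{.metadata.name},{.spec.nodeName}{"\n"}{end}' | pods_per_node
```

For the listing above this should collapse to a single line for node-100000001b5d3ab7 with a count of 17.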

Access Mode Trouble

There is a problem with the ROM data PersistentVolumeClaim:

sandy@bunsen:~/arkade$ cat roms-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: roms
  namespace: games
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 128Mi

When I set up the PVC I just used the built-in local-path storage class that comes by default with k3s. local-path only supports the ReadWriteOnce access mode, which means all pods that mount the roms volume have to be placed on the same node.
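To confirm what a claim actually requests, and which storage classes the cluster offers, something like the following could be used. It is only a sketch: `pvc_access_modes` is a hypothetical wrapper name, while the underlying `kubectl get ... -o jsonpath` call is standard kubectl.

```shell
#!/bin/sh
# Hypothetical helper: print the access modes requested by a PVC.
# $1 = namespace, $2 = PVC name
pvc_access_modes() {
  kubectl -n "$1" get pvc "$2" -o jsonpath='{.spec.accessModes[*]}'
}

# Usage (needs kubectl access to the cluster):
# pvc_access_modes games roms     # the claim shown above
# kubectl get storageclass        # lists available classes and their provisioners
```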

Trying out Longhorn

So I thought I’d give Longhorn a try. The basic install goes like this:

kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/v1.9.1/deploy/longhorn.yaml

There’s a nice frontend user interface for the system so I added a Traefik IngressRoute to get at that (and a host-record for dnsmasq on the cluster’s router).

sandy@bunsen:~/k3s$ more longhorn_IngressRoute.yaml

apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: longhorn
  namespace: longhorn-system
spec:
  entryPoints:
    - websecure
  routes:
    - kind: Rule
      match: Host(`longhorn`)
      priority: 10
      services:
        - name: longhorn-frontend
          port: 80
  tls:
    certResolver: default

kubectl apply -f longhorn_IngressRoute.yaml

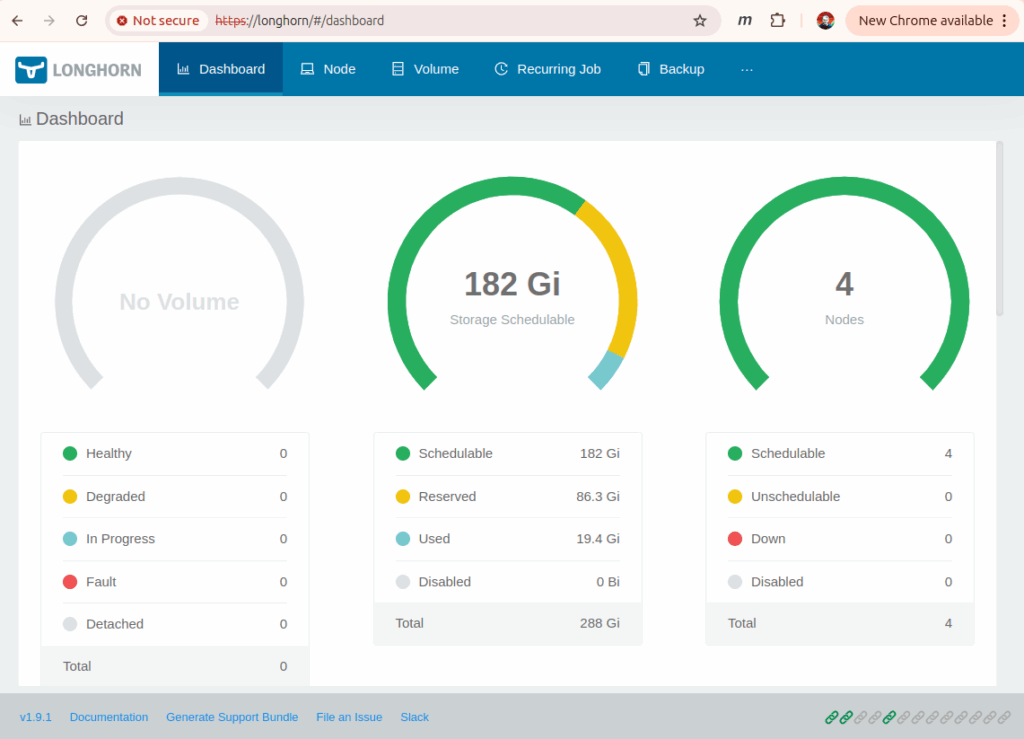

After a little wait the UI was available:

Size Matters

Pretty quickly after that the whole cluster crashed (the picture above was taken just now after I fixed a bunch of stuff).

When I first set up the cluster I’d used micro SD cards like these ones:

After running the cluster for about a week and then adding Longhorn, the file systems on the nodes were pretty full (especially on the control-plane node). Adding the longhorn images put storage pressure on the nodes so that nothing could schedule. So I switched out the micro SD cards (128GB on the control-plane and 64GB on the other nodes). Then I rolled all the nodes to reinstall the OS and expand the storage volumes.

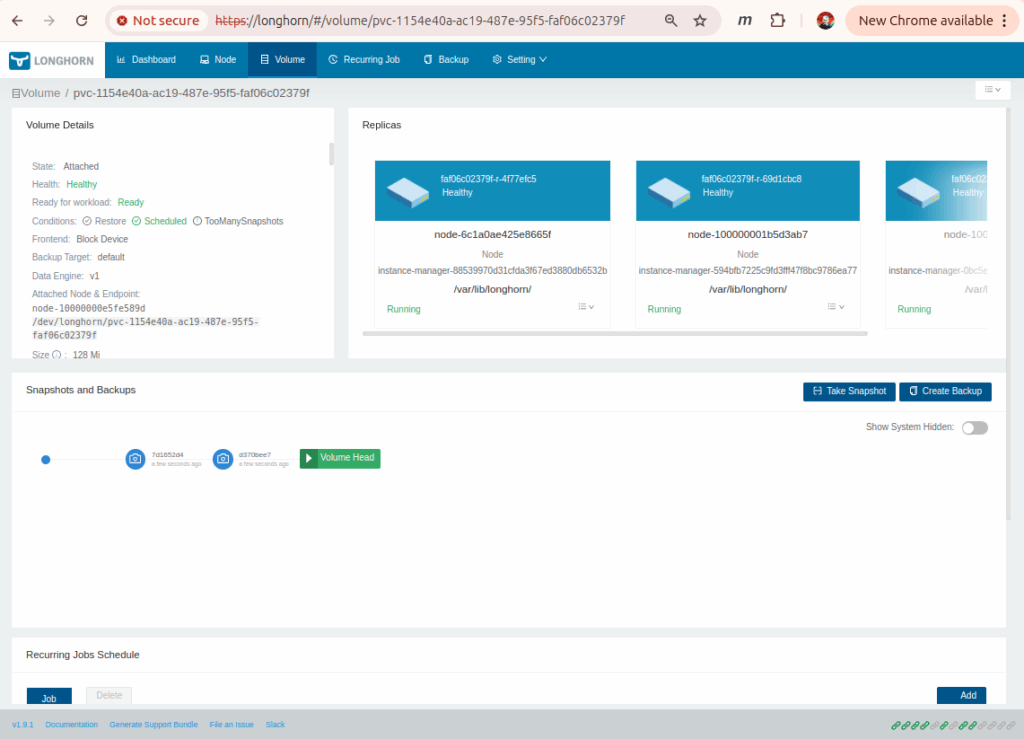

With a more capable storage driver in place, it was time to try updating the PVC definition:

sandy@bunsen:~/arkade$ cat roms-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: roms
  namespace: games
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: longhorn
  resources:
    requests:
      storage: 128Mi

Here I changed the access mode to ReadWriteMany and the storageClassName to longhorn. Then I re-deployed my arkade projects:

make argocd_create argocd_sync

...

sandy@bunsen:~/arkade$ kubectl -n games get pods

NAME READY STATUS RESTARTS AGE

1943mii-b59f897f-rsklf 0/1 ContainerCreating 0 3m30s

...

topgunnr-c8fb7467f-c7hbb 0/1 ContainerCreating 0 3m2s

truxton-76bf94c65f-8vzn4 0/1 ContainerCreating 0 3m

victory-5d695d668c-9wdcv 0/1 ContainerCreating 0 2m59s

Something’s not right: the pods aren’t starting…

sandy@bunsen:~/arkade$ kubectl -n games describe pod victory-5d695d668c-9wdcv

Name: victory-5d695d668c-9wdcv

...

Containers:

game:

Container ID:

Image: docker-registry:5000/victory:latest

Image ID:

Port: 80/TCP

Host Port: 0/TCP

State: Waiting

...

Conditions:

Type Status

PodReadyToStartContainers False

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

roms:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: roms

ReadOnly: false

...

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m39s default-scheduler Successfully assigned games/victory-5d695d668c-9wdcv to node-100000001b5d3ab7

Warning FailedAttachVolume 3m11s (x3 over 3m36s) attachdetach-controller AttachVolume.Attach failed for volume "pvc-003e701a-aec0-4ec5-b93e-4c9cc9b25b1c" : CSINode node-100000001b5d3ab7 does not contain driver driver.longhorn.io

Normal SuccessfulAttachVolume 2m38s attachdetach-controller AttachVolume.Attach succeeded for volume "pvc-003e701a-aec0-4ec5-b93e-4c9cc9b25b1c"

Warning FailedMount 2m36s kubelet MountVolume.MountDevice failed for volume "pvc-003e701a-aec0-4ec5-b93e-4c9cc9b25b1c" : rpc error: code = Internal desc = mount failed: exit status 32

Mounting command: /usr/local/sbin/nsmounter

Mounting arguments: mount -t nfs -o vers=4.1,noresvport,timeo=600,retrans=5,softerr 10.43.221.242:/pvc-003e701a-aec0-4ec5-b93e-4c9cc9b25b1c /var/lib/kubelet/plugins/kubernetes.io/csi/driver.longhorn.io/a8647ba9f96bea039a22f898cf70b4284f7c1b8ba30808feb56734de896ec0b8/globalmount

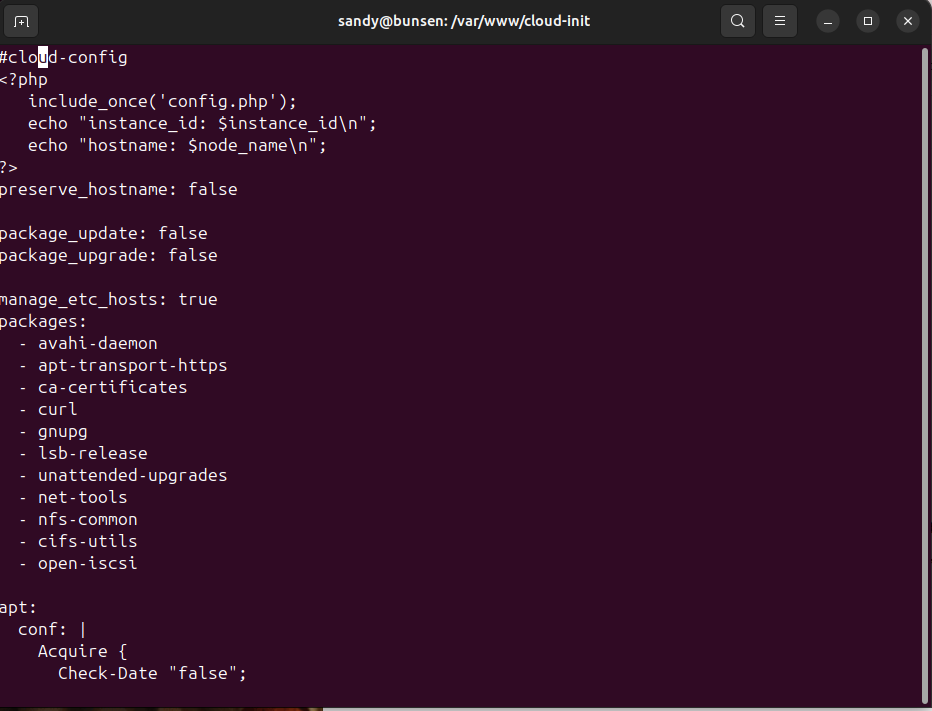

Oh, the NFS mounts are failing. Guess I need to install the NFS client on the nodes. To do that, I just updated my cloud-init/userdata definition to add the network tool packages:

roll – reinstall – redeploy – repeat…

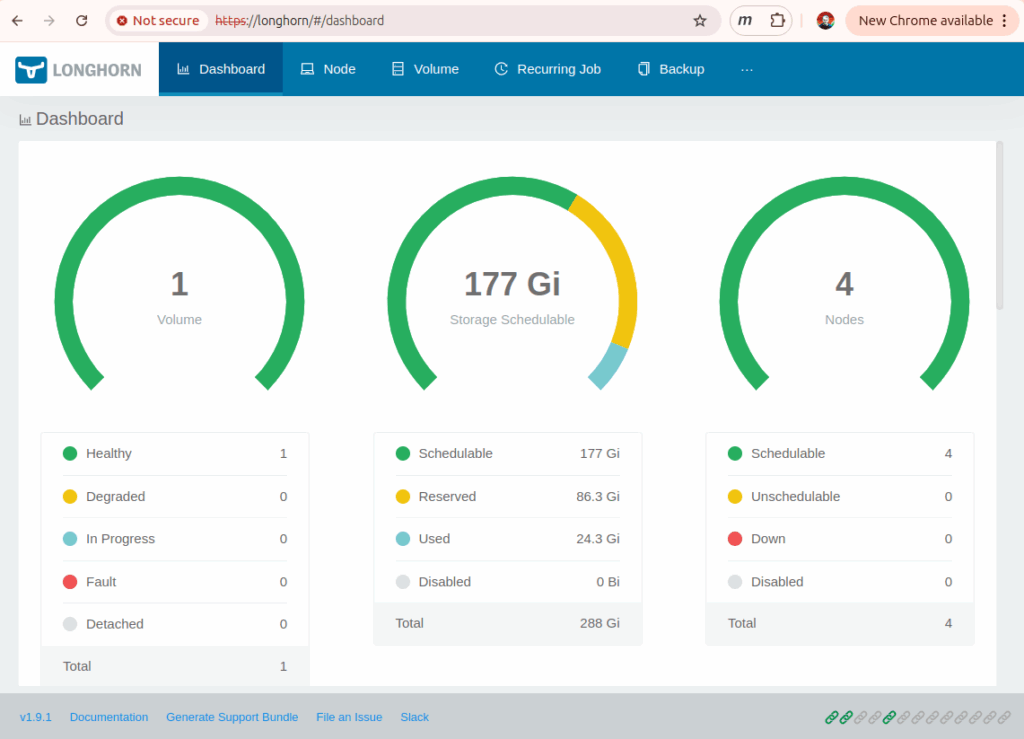
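Since every missing package costs a full node roll, it can help to check on a node that the client tools are really there. The sketch below assumes a Debian-style image where `nfs-common` provides `mount.nfs`, `cifs-utils` provides `mount.cifs`, and `open-iscsi` provides `iscsiadm`; those package-to-binary mappings and the helper name `missing_tools` are assumptions for illustration, not taken from my cloud-init file.

```shell
#!/bin/sh
# Hypothetical helper: print each named command that cannot be found.
# Runs in a subshell so the PATH change does not leak to the caller.
# /sbin and /usr/sbin are appended because mount helpers usually live there.
missing_tools() (
  PATH="$PATH:/sbin:/usr/sbin"
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
)

# Usage on a node:
# missing_tools mount.nfs mount.cifs iscsiadm
```

Empty output means everything in the list was found; anything printed is a tool that still needs its package added before the next roll.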

Finally Distributed Storage

sandy@bunsen:~/arkade$ kubectl -n games get pods -ojsonpath='{range .items[*]}{.metadata.name},{.spec.nodeName}{"\n"}{end}'

1943mii-b59f897f-qfb97,node-100000008d83d984

20pacgal-df4b8848c-x2qdm,node-100000001b5d3ab7

centiped-9c676978c-qcgxg,node-100000008d83d984

circus-7c755f8859-s2t87,node-100000001b5d3ab7

defender-654d5cbfc5-7922b,node-10000000e5fe589d

dkong-5cfb8465c-6hnrn,node-100000008d83d984

gng-6d5c97d9b7-7qc9n,node-10000000e5fe589d

invaders-76c46cb6f5-m2x7n,node-100000001b5d3ab7

joust-ff654f5b9-htbrn,node-100000001b5d3ab7

milliped-86bf6ddd95-sq4jt,node-100000008d83d984

pacman-559b59df59-tkwx4,node-10000000e5fe589d

qix-7d5995ff79-s8vxv,node-100000001b5d3ab7

robby-5947cf94b7-k876b,node-100000008d83d984

supertnk-5dbbffdf7f-pn4fw,node-10000000e5fe589d

topgunnr-c8fb7467f-5v5h6,node-100000001b5d3ab7

truxton-76bf94c65f-nqcdt,node-10000000e5fe589d

victory-5d695d668c-dxdd8,node-100000008d83d984

!!

Longhorn Setup

Here’s my longhorn setup script:

sandy@bunsen:~/k3s$ cat longhorn.sh

#!/bin/bash
. ./functions.sh

# Install Longhorn and expose its UI through Traefik
kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/v1.9.1/deploy/longhorn.yaml
kubectl apply -f longhorn_IngressRoute.yaml
https_wait https://longhorn

# Samba credentials for the backup target; Secret data must be base64-encoded
USERNAME=myo
PASSWORD=business
CIFS_USERNAME=$(echo -n "${USERNAME}" | base64)
CIFS_PASSWORD=$(echo -n "${PASSWORD}" | base64)

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: longhorn-smb-secret
  namespace: longhorn-system
type: Opaque
data:
  CIFS_USERNAME: ${CIFS_USERNAME}
  CIFS_PASSWORD: ${CIFS_PASSWORD}
EOF

kubectl create -f longhorn_BackupTarget.yaml

sandy@bunsen:~/k3s$ cat longhorn_IngressRoute.yaml

apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: longhorn
  namespace: longhorn-system
spec:
  entryPoints:
    - websecure
  routes:
    - kind: Rule
      match: Host(`longhorn`)
      priority: 10
      services:
        - name: longhorn-frontend
          port: 80
  tls:
    certResolver: default

sandy@bunsen:~/k3s$ cat longhorn_BackupTarget.yaml

apiVersion: longhorn.io/v1beta2
kind: BackupTarget
metadata:
  name: default
  namespace: longhorn-system
spec:
  backupTargetURL: "cifs://192.168.1.1/sim/longhorn_backup"
  credentialSecret: "longhorn-smb-secret"
  pollInterval: 5m0s

I also added a BackupTarget pointed at my main Samba server, and that needed a login secret (and took another node roll sequence because I forgot to add the CIFS tools when I first added the nfs-common packages).
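One detail worth spelling out about the login secret: values under `data:` in a Secret have to be the base64 of the exact bytes, which is why the script uses `echo -n` (otherwise a trailing newline ends up in the password). A slightly more portable sketch uses `printf`; `b64` is a made-up helper name, and the `kubectl create secret generic` variant in the comments is an alternative that lets kubectl do the encoding, not what my script does.

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a string without a trailing newline.
b64() {
  printf '%s' "$1" | base64
}

# Usage:
# CIFS_USERNAME=$(b64 "$USERNAME")
#
# Alternative that lets kubectl do the encoding:
# kubectl -n longhorn-system create secret generic longhorn-smb-secret \
#   --from-literal=CIFS_USERNAME="$USERNAME" \
#   --from-literal=CIFS_PASSWORD="$PASSWORD"
```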

-Sandy
